Tools in Detail

From WebLichtWiki


Introduction

Computational linguistic tools are programs that perform analyses of linguistic data, or assist in performing such analyses. This section will provide an introduction to the general classes of linguistic tools and what purposes they serve.

Many computational linguistic tools, especially the oldest and most widely used ones, are extensions of pre-computer techniques used to analyze language. Tokenization, part-of-speech tagging, parsing and word sense disambiguation, as well as many others, all have roots in the pre-computer world, some going back thousands of years. Computational tools automate these long-standing analytical techniques, often imperfectly but still productively.

Other tools, in contrast, are exclusively motivated by the requirements of computer processing of language. Sentence splitters, bilingual corpus aligners, and named entity recognizers, among others, only make sense in the context of computers and have little immediate connection to general linguistics, but they may be very important for computer applications.

Linguistic tools can also encompass programs designed to enhance access to digital language data. Some of these are extensions of pre-computer techniques like concordances and indexes, but can also include more recent developments like search engines. Textual information retrieval is a large field and this document will discuss only search and retrieval tools specialized for linguistic analysis.

Hierarchies of Linguistic Tools

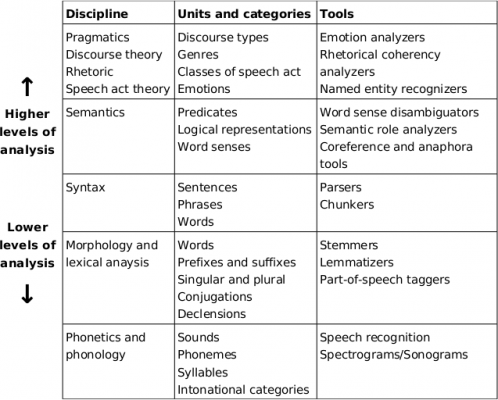

Linguistic tools are often interdependent and frequently incorporate some elements of linguistic theory. Modern linguistics draws on traditions of structuralism, a paradigm and school of thought in the humanities and social sciences dating to the early 20th century. Structuralism emphasized the study of phenomena as hierarchical systems of elements, organized into different levels of analysis, each with their own units, rules, and methodologies. Linguists, in general, organize their theories in ways that show structuralist influences, although many disclaim any attachment to structuralism. Linguists disagree about what levels of analysis exist, to what degree different languages might have differently organized systems of analysis, and how those levels interrelate. However, hierarchical systems of units and levels of analysis are part of almost all linguistic theories and are very heavily reflected in the practices of computational linguists.

Linguistic tools are usually categorized by the level of analysis they perform, and different tools may operate at different levels and over different units. There are often hierarchical interdependencies between tools: a tool used to perform analysis at one level may require, as input, the results of an analysis at a lower level.

Figure 1 is a simplified hierarchy of linguistic units, subdisciplines and tools. It does not provide a complete picture of linguistics and it is not necessarily representative of any specific linguistic school. However, it provides an outline and a reference framework for understanding the way hierarchical dependencies between levels of analysis affect linguistic theories and linguistic tools.

The hierarchical relationship between levels of analysis in Figure 1 generally applies to linguistic tools: higher levels of analysis generally depend on lower ones. Syntactic analysis like parsing usually requires words to be clearly delineated and part-of-speech tagging or morphological analysis to be performed first. In practice, this means that texts must be tokenized, their sentences clearly separated from each other, and their morphological properties analyzed before parsing can begin. In the same way, semantic analysis often depends on identifying the syntactic relationships between words and other elements, so the inputs to semantic analysis tools are often the outputs of parsers.
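The dependency ordering described above can be sketched in code: if each tool declares which other tools' output it needs, a valid processing order is a topological sort of that dependency graph. The tool names and dependency sets below are hypothetical and only loosely follow Figure 1; the sketch uses the `graphlib` module from Python's standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical tools, each mapped to the tools whose output it needs as input.
DEPENDENCIES = {
    "sentence_splitter": {"tokenizer"},
    "pos_tagger": {"tokenizer", "sentence_splitter"},
    "morph_analyzer": {"tokenizer"},
    "parser": {"sentence_splitter", "pos_tagger", "morph_analyzer"},
    "semantic_analyzer": {"parser"},
}


def pipeline_for(goal: str) -> list[str]:
    """Return an order in which to run tools so every input of `goal` exists."""
    # Collect the goal and everything it depends on, directly or indirectly.
    needed: set[str] = set()
    stack = [goal]
    while stack:
        tool = stack.pop()
        if tool not in needed:
            needed.add(tool)
            stack.extend(DEPENDENCIES.get(tool, set()))
    # Sort only the needed tools; each node maps to its predecessors.
    graph = {tool: DEPENDENCIES.get(tool, set()) & needed for tool in needed}
    return list(TopologicalSorter(graph).static_order())


print(pipeline_for("parser"))  # tokenizer first, parser last
```

Real dependencies are not strictly one-directional, as the exceptions in the next paragraph show, so a graph like this is a simplification rather than a model of any particular workflow system.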

However, this simplistic picture has many important exceptions. Lower level phenomena often depend on higher level ones. Correctly identifying the part-of-speech, lemma, and morphological categories of a word may depend on a syntactic analysis. Phonetic and phonological analysis can interact with morphological and syntactic analysis. Even speech recognition, one of the lowest level tasks, depends strongly on knowledge of the semantic and pragmatic context of speech.

Furthermore, there is no level of analysis for which all linguists agree on a single standard set of units of analysis or annotation scheme. Different tools will have radically different inputs and outputs depending on the theoretical traditions and commitments of their authors.

Most tools are also language specific. There are few functional generalizations between languages that can be used to develop single tools that apply to multiple languages. Different countries with different languages often have very different indigenous traditions of linguistic analysis, and different linguistic theories are popular in different places, so it is not possible to assume that tools doing the same task for different languages will necessarily be very similar in inputs or outputs.

Corpus and computational linguists often work with written texts, and therefore usually avoid doing any kind of phonetic analysis. For that reason, this chapter will not discuss speech recognition and phonetic analysis tools, although some of the multimedia tools discussed in this chapter can involve some phonetic analysis. At the highest levels of analysis, tools are very specialized and standardization is rare, so few classes of very high level tools are discussed here.

Automatic and Manual Analysis Tools

Although some tools exist only to provide linguists with interfaces to stored language data, many are used to produce an annotated resource. Annotation involves the addition of detailed information to a linguistic resource about its contents. For example, a corpus in which each word is accompanied by a part-of-speech tag, or a phonetic transcription, or in which all named entities are clearly marked, is an annotated corpus. Linguistic data in which the syntactic relationships between words are marked is usually called a treebank. The addition of annotations increases the accessibility and usability of resources for linguists and may be required for further computer processing.

Automatic annotation tools add detailed information to language data on the basis of procedures written into the software, without human intervention other than to run the program. Automatic annotation is sometimes performed by following rules set out by programmers and linguists, but most often, annotation programs are at least partly based on machine learning algorithms that are trained using manually annotated examples.

Automated annotation processes almost always have some rate of error, and where possible, researchers prefer manually annotated resources with fewer errors. These resources are, naturally, more expensive to construct, rarer and smaller in size than automatically annotated data, but they are essential for the development of automated resources and necessary whenever the desired annotation either has not yet been automated or cannot be automated. Various tools exist to make it easier for people to annotate language data.
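Error rates of automatic annotation are usually estimated by comparing a tool's output against a manually annotated sample of the same text. A minimal sketch, assuming both annotations cover exactly the same tokens; the tags are STTS tags for the German phrase “Der Hund bellt laut”, and the tool output is hypothetical:

```python
def tagging_accuracy(predicted: list[str], gold: list[str]) -> float:
    """Share of tokens whose automatic tag equals the manual (gold) tag."""
    if len(predicted) != len(gold) or not gold:
        raise ValueError("need two non-empty annotations of the same tokens")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


gold = ["ART", "NN", "VVFIN", "ADJD"]       # manual annotation
predicted = ["ART", "NN", "VVFIN", "ADV"]   # hypothetical tool output
print(tagging_accuracy(predicted, gold))    # 0.75, i.e. a 25% error rate
```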

Technical issues in linguistic tool management

Many of the most vexing technical issues in using linguistic tools are common problems in computer application development. Data can be stored and transmitted in an array of incompatible formats, and either there are no standards, or too many standards, or standards compliance is poor. Linguistic tool designers are rarely concerned with those kinds of issues or specialized in resolving them.

Many tools accept and produce only plain text data, sometimes with specified delimiters and internal structures accessible to ordinary text editors. For example, some tools require each word to be on a separate line, while others require each sentence to be on its own line. Some use comma- or tab-delimited text files to encode annotation in their output, or require them as input. These encodings are often historically rooted in the data storage formats of early language corpora. A growing number of tools use XML for input or output.
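Reading such line-oriented formats is usually simple. The sketch below assumes a hypothetical layout similar to the CoNLL family of formats: one token per line, annotation columns separated by tabs, and a blank line between sentences. Actual tools differ in column order and conventions, so the layout has to be checked for each tool.

```python
def read_vertical(text: str) -> list[list[tuple[str, ...]]]:
    """Parse one-token-per-line, tab-delimited text into sentences.

    Assumed (hypothetical) layout: TOKEN<TAB>TAG... per line, with a blank
    line after each sentence.
    """
    sentences, current = [], []
    for line in text.splitlines():
        if not line.strip():
            if current:                # a blank line closes the sentence
                sentences.append(current)
                current = []
            continue
        current.append(tuple(line.split("\t")))
    if current:                        # last sentence may lack a blank line
        sentences.append(current)
    return sentences


sample = "Der\tART\nHund\tNN\nbellt\tVVFIN\n.\t$.\n\nEr\tPPER\nschläft\tVVFIN\n"
for sentence in read_vertical(sample):
    print(sentence)
```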

One of the major differences between annotation programs is whether they use inline or stand-off annotation. Inline annotation mixes the data and annotation in a single file or data structure. Stand-off annotation stores annotations separately, either in a different file or in some other way apart from the language data, with a reference scheme to connect the two.
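The difference between the two approaches can be shown on a short German sentence. In this sketch, each stand-off layer is a list of character offsets with labels (start inclusive, end exclusive); this representation is an illustrative assumption, not the format of any specific tool. Rendering a layer inline produces the familiar word/TAG style.

```python
# Stand-off annotation: the text is never modified; each annotation points
# into it by character offsets (start inclusive, end exclusive).
text = "Der Hund bellt."
pos_layer = [(0, 3, "ART"), (4, 8, "NN"), (9, 14, "VVFIN"), (14, 15, "$.")]
lemma_layer = [(0, 3, "der"), (4, 8, "Hund"), (9, 14, "bellen"), (14, 15, ".")]


def to_inline(text: str, layer: list[tuple[int, int, str]]) -> str:
    """Render one stand-off layer as inline word/TAG annotation."""
    return " ".join(f"{text[start:end]}/{label}" for start, end, label in layer)


print(to_inline(text, pos_layer))    # Der/ART Hund/NN bellt/VVFIN ./$.
print(to_inline(text, lemma_layer))  # Der/der Hund/Hund bellt/bellen ./.
```

Because the stand-off layers never modify `text`, any number of them can point into the same string independently, which is harder to arrange with inline annotation.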

Character encoding is an important technical issue when using linguistic tools. Most languages use some characters outside 7-bit ASCII, and different languages and operating systems have used different character standards. Unicode and UTF-8 are increasingly widely used for written language data to minimize these problems, but not all tools support Unicode. Full Unicode compatibility has only recently become available on most operating systems and programming frameworks, and many incompatible linguistic tools are still in use. Furthermore, Unicode supports so many different characters that simple assumptions about texts, such as which characters count as punctuation or as spaces between words, may not hold for Unicode texts in ways that some tools cannot handle.
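Both problems can be seen with Python's standard `unicodedata` module: identical-looking strings may use different code point sequences, and not every word separator or punctuation mark is an ASCII character. The example strings are illustrative:

```python
import unicodedata


def same_text(a: str, b: str) -> bool:
    """Compare strings after NFC normalization, so that precomposed and
    decomposed spellings of the same character count as equal."""
    return unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)


def punctuation_chars(text: str) -> list[str]:
    """Characters whose Unicode general category is punctuation (P...)."""
    return [c for c in text if unicodedata.category(c).startswith("P")]


composed = "M\u00fcller"        # 'ü' as a single code point, U+00FC
decomposed = "Mu\u0308ller"     # 'u' followed by combining diaeresis U+0308
print(composed == decomposed, len(composed), len(decomposed))  # False 6 7
print(same_text(composed, decomposed))                         # True

# Guillemets are punctuation too, although they are not ASCII.
print(punctuation_chars("«Hallo!»"))                           # ['«', '!', '»']

# A no-break space (U+00A0) separates words visually, but a tool that
# splits only on the ASCII space character will not see it.
print("100\u00a0km".split(" "), "100\u00a0km".split())         # ['100\xa0km'] ['100', 'km']
```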

Automatic Annotation Tools

Sentence Splitters

Sentence splitters, sometimes called sentence segmenters, split text up into individual sentences with unambiguous delimiters.

Recognizing sentence boundaries in texts sounds very easy, but it can be a complex problem in practice. Sentences are not clearly defined in general linguistics, and sentence-splitting programs are driven by the punctuation of texts and the practical concerns of computational linguistics, not by linguistic theory.

Punctuation dates back a very long time - at least to the 9th century BCE - but until modern times not all written languages used it. The sentence itself, as a linguistic unit delimited by punctuation, is an invention of 16th century Italian printers and did not reach some parts of the world until the mid-20th century.

In many languages, including most European languages, sentence-delimiting punctuation has functions other than marking sentence ends. The period (“.”) often marks abbreviations and acronyms and is also used to write numbers. Sentences can also end with a wide variety of punctuation other than the period. Question marks, exclamation marks, ellipses, colons, semicolons and a variety of other markers must have their function in a specific context correctly identified before they can be confidently treated as sentence delimiters. Additional problems arise with quotes, URLs and proper nouns that incorporate non-standard punctuation. Furthermore, most texts contain errors and inconsistencies of punctuation that simple algorithms cannot easily identify or correct.
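A rule-based splitter along these lines can be sketched with a regular expression and an abbreviation list. This is a minimal illustration under stated assumptions: the abbreviation list is tiny and hypothetical, and the heuristic (no boundary after a known abbreviation or before a lower-case word) is one simple choice among many. It does not describe how any of the WebLicht tools work.

```python
import re

# Tiny, hypothetical abbreviation list (lower case); real splitters use long
# language-specific lists or trained models.
ABBREVIATIONS = {"dr.", "mr.", "e.g.", "i.e.", "z.b.", "usw."}

# Candidate boundary: ., ! or ?, an optional closing quote or bracket,
# then whitespace.
CANDIDATE = re.compile(r"""[.!?]["')\]]?\s+""")


def split_sentences(text: str) -> list[str]:
    """Split at candidate boundaries, except after a known abbreviation or
    before a word that starts with a lower-case letter."""
    sentences, start = [], 0
    for m in CANDIDATE.finditer(text):
        words = text[start:m.start() + 1].split()
        last_word = words[-1].lower() if words else ""
        next_char = text[m.end():m.end() + 1]
        if last_word in ABBREVIATIONS or next_char.islower():
            continue  # probably not a sentence boundary; keep scanning
        sentences.append(text[start:m.end()].strip())
        start = m.end()
    rest = text[start:].strip()
    if rest:
        sentences.append(rest)
    return sentences


for s in split_sentences('Dr. Smith arrived. He said "Stop." Then he left, e.g. quickly. Done!'):
    print(s)
```

Even this small example shows the trade-offs: if “etc.” were added to the abbreviation list, a sentence that really ends with it would be merged with the next one, and German ordinals such as “am 3. Mai” would need further rules.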

Sentence splitters are often integrated into tokenizers, but some separate tools are available. CLARIN-D and WebLicht offer integrated tokenizer/sentence-splitter tools, but no separate sentence-splitting tool at this time.

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Tokenizer 2019 | IMS: University of Stuttgart | de fr en hu it sl | tokens, sentences | Czech, Slovenian, Hungarian, Italian, French, German, English tokenizer and sentence boundary detector |
| Tokenizer/Sentences - OpenNLP Project | SfS: Uni-Tuebingen | de en tr | tokens, sentences | Tokenizer and sentence splitter from the OpenNLP project. The 'newlineBounds' parameter treats newlines as a hard break (a sentence boundary). |
| SoMaJo Tokenizer | SfS: Uni-Tuebingen | de | tokens, sentences | SoMaJo is a state-of-the-art tokenizer and sentence splitter for German web and social media texts. More information: https://github.com/tsproisl/SoMaJo |
| ASV Segmentizer (and Tokenizer) | CLARIN-D center, Natural Language Processing Group, University of Leipzig | de | tokens, sentences | The sentence segmentizer used by the Wortschatz project for German texts (also containing a very simple tokenizer) |
| BlingFire Tokenizer | SfS: Uni-Tuebingen | pl ar de fr en hi sv et hu el ru ro be it pt bg uk ur nl cs da es fi la tr vi eu ca zh hr gl he id ga ja kk ko lv lt cu fa sa sk sl ta ug | tokens, sentences | Tokenizer and sentence splitter from Microsoft, called BlingFire, designed for fast, high-quality tokenization of natural language. For more information about its quality and efficiency, see the GitHub page: https://github.com/microsoft/BlingFire |
| Tokenizer/Sentences - Alpino | SfS: Uni-Tuebingen | nl | tokens, sentences | Tokenizer and sentence splitter from Alpino. |
| Stanford Tokenizer | SfS: Uni-Tuebingen | en | tokens, sentences | Stanford Tokenizer is an efficient, fast, deterministic tokenizer. |
| Tokenizer and Sentence Splitter | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | tokens, sentences | Detects word and sentence boundaries in raw text using WASTE (http://www.dwds.de/waste/) |
| Tokenizer | IMS: University of Stuttgart | de fr en hu it sl | tokens, sentences | Czech, Slovenian, Hungarian, Italian, French, German, English tokenizer and sentence boundary detector |

Tokenizers

A token is a unit of language akin to a word but not quite the same. In computational linguistics it is often more practical to discuss tokens instead of words, since a token encompasses many linguistically anomalous elements found in actual texts - numbers, abbreviations, punctuation, among other things - and avoids many of the complex theoretical considerations involved in talking about words.

Tokenization is usually understood as a way of segmenting texts rather than transforming them or adding feature information. Each token corresponds to a particular sequence of characters that forms, for the purposes of further research or processing, a single unit. Identifying tokens from digital texts can be complicated, depending on the language of the text and the linguistic considerations that go into processing it.

In modern times, most languages have writing systems derived from ancient languages like Phoenician and Aramaic used by traders in the Middle East and on the Mediterranean Sea starting about 3000 years ago. The Latin, Greek, Cyrillic, Hebrew and Arabic alphabets are all directly derived from a common ancient source, and most historians think the writing systems of India and Central Asia come from the same origin. See [Fischer2005] and [SchmandtBesserat1992] for fuller histories of writing.

The first languages to systematically use letters to represent sounds usually separated words from each other with a consistent mark, generally a bar (“|”) or a double-dot mark much like a colon (“:”). However, many languages with alphabets stopped using explicit word delimiters over time: Latin, Greek, Hebrew and the languages of India were not written with any consistent word marker for many centuries. Whitespace between words was introduced in western Europe in the 12th century, probably invented by monks in Britain or Ireland, and spread slowly to other countries and languages [Saenger1997]. Since the late 19th century, most major languages have been written with regular spaces between words.

For those languages, much but not all of the work of tokenizing digital texts is performed by whitespace characters and punctuation. The simplest tokenizers just split the text up by looking for whitespace, and then separate punctuation from the ends and beginnings of words.
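The simplest approach described above fits in a few lines. This sketch splits on whitespace and then peels punctuation characters off the beginning and end of each chunk; the set of punctuation characters (ASCII punctuation plus a few common typographic marks) is an assumption.

```python
import string

# ASCII punctuation plus a few typographic marks (an illustrative choice).
PUNCT = set(string.punctuation) | set("„“”‘’«»…")


def simple_tokenize(text: str) -> list[str]:
    """Split on whitespace, then peel punctuation off both ends of each chunk."""
    tokens = []
    for chunk in text.split():
        leading, trailing = [], []
        while chunk and chunk[0] in PUNCT:
            leading.append(chunk[0])
            chunk = chunk[1:]
        while chunk and chunk[-1] in PUNCT:
            trailing.insert(0, chunk[-1])
            chunk = chunk[:-1]
        tokens.extend(leading)
        if chunk:
            tokens.append(chunk)
        tokens.extend(trailing)
    return tokens


print(simple_tokenize('"Hello," she said (quietly).'))
# ['"', 'Hello', ',', '"', 'she', 'said', '(', 'quietly', ')', '.']
```

Its limits show up quickly: `simple_tokenize("the U.S. army")` yields `['the', 'U.S', '.', 'army']`, splitting the final period off an abbreviation, which is one reason practical tokenizers use abbreviation lists or trained models.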

Of major modern languages, only Chinese, Japanese and Korean have writing systems not thought to be derived from a common Middle Eastern ancestor. Chinese and Japanese do not mark word boundaries in ordinary texts, and although modern Korean uses spaces, they separate larger units, typically a word together with its attached particles, rather than individual words. Tokenization in these languages is a more complex process that can involve large dictionaries and sophisticated machine learning procedures. Vietnamese, which is written with a version of the Latin alphabet today but used to be written with Chinese-derived characters, places spaces between every syllable, so even though it uses spaces, they do not reliably mark word boundaries.
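One classic dictionary-based technique for text without word delimiters is greedy forward maximum matching: at each position, take the longest dictionary entry that matches. The sketch below uses a toy lexicon for the Chinese sentence 我们喜欢自然语言处理 (“we like natural language processing”); real segmenters use large dictionaries and statistical models, and greedy matching is shown here only as the simplest baseline.

```python
def max_match(text: str, lexicon: set[str], max_len: int = 4) -> list[str]:
    """Greedy forward maximum matching over an unsegmented string."""
    tokens, i = [], 0
    while i < len(text):
        # Try the longest window first; a single character always succeeds,
        # so unknown characters become one-character tokens.
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            if size == 1 or candidate in lexicon:
                tokens.append(candidate)
                i += size
                break
    return tokens


lexicon = {"我们", "喜欢", "自然", "语言", "自然语言", "处理"}
print(max_match("我们喜欢自然语言处理", lexicon))
# ['我们', '喜欢', '自然语言', '处理']
```

Greedy matching fails whenever the longest match is the wrong one. Applied to the English string “thetabledownthere” with a lexicon containing the, theta, table, bled, down, own and there (and `max_len=5`), it returns theta / bled / own / there instead of the / table / down / there.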

However, even in alphabetic languages with clear word delimiters, tokenization can be very complicated. Usable tokens do not always match the locations of spaces.

Compound words exist in many languages and require more complex processing. The German word Donaudampfschiffahrtselektrizitätenhauptbetriebswerkbauunterbeamtengesellschaft is one famous case, but English has linguistically similar compounds like low-budget and first-class. Tokenizers are often expected to split such compounds up.
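Compound splitting is often done by searching for a sequence of dictionary words that spells out the compound. This sketch recursively tries the longest known first part, allowing a German linking element (Fugenelement) such as -s- between parts. The lexicon and the short list of linking elements are illustrative assumptions; practical splitters also rank competing analyses, for example by word frequency.

```python
from typing import Optional

# Linking elements that can join German compound parts; this short list is
# an illustrative assumption, not a complete inventory.
LINKERS = ("s", "es", "n", "en", "")


def split_compound(word: str, lexicon: set[str]) -> Optional[list[str]]:
    """Split `word` into lower-case lexicon entries, allowing a linking
    element between parts. Returns None if no complete split exists."""
    w = word.lower()
    if w in lexicon:
        return [w]
    for i in range(len(w) - 1, 0, -1):          # longest first part first
        head, rest = w[:i], w[i:]
        if head not in lexicon:
            continue
        for link in LINKERS:
            if rest.startswith(link) and len(rest) > len(link):
                tail = split_compound(rest[len(link):], lexicon)
                if tail is not None:
                    return [head] + tail
    return None


print(split_compound("Donaudampfschifffahrt", {"donau", "dampf", "schiff", "fahrt"}))
# ['donau', 'dampf', 'schiff', 'fahrt']
print(split_compound("Arbeitszeit", {"arbeit", "zeit"}))
# ['arbeit', 'zeit']  (the linking -s- is dropped)
```

The example uses the post-1996 spelling Schifffahrt. In the older spelling of the word quoted above, one f was dropped before a vowel, which a purely dictionary-based splitter like this one would not recover.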

In other cases, something best treated as a single token may appear in text as multiple words separated by spaces, like New York. Some compounds are also spelled inconsistently, appearing sometimes as separate words and sometimes not: egg beater, egg-beater and eggbeater are all possible in English and mean the same thing.

Short phrases that are composed of multiple words separated by spaces may also sometimes be best analyzed as a single word, like the phrase by and large or pain in the neck in English. These are called multi-word terms and may overlap with what people usually call idioms.
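Multi-word terms are often handled by merging known token sequences into single tokens after whitespace tokenization. A minimal sketch, assuming a small hand-made lexicon of multi-word terms and joining the parts with an underscore (a common but not universal convention):

```python
# Toy lexicon of multi-word terms as token tuples (an illustrative assumption).
MWE_LEXICON = {("New", "York"), ("by", "and", "large"), ("pain", "in", "the", "neck")}


def merge_mwes(tokens: list[str], lexicon: set[tuple[str, ...]] = MWE_LEXICON) -> list[str]:
    """Greedily replace the longest known multi-word term at each position
    with a single token whose parts are joined by '_'."""
    max_len = max(len(term) for term in lexicon)
    out, i = [], 0
    while i < len(tokens):
        for size in range(min(max_len, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + size]) in lexicon:
                out.append("_".join(tokens[i:i + size]))
                i += size
                break
        else:                      # no multi-word term starts here
            out.append(tokens[i])
            i += 1
    return out


print(merge_mwes("by and large , New York is a pain in the neck".split()))
# ['by_and_large', ',', 'New_York', 'is', 'a', 'pain_in_the_neck']
```

Matching is case-sensitive here, so “By and large” at the start of a sentence would not be merged; real systems normalize case or store variants.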

Contractions like I'm and don't also pose problems, since many higher level analytical tools like part-of-speech taggers and parsers may require them to be broken up, and many linguistic theories treat them as more than one word for grammatical purposes. Phrasal verbs in English like to walk out and separable verbs in German like auffahren are another problem for tokenizers, since these are often best treated as single words but are split into parts that may not appear near each other in texts.
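Splitting contractions is usually done with rules. This sketch follows the Penn Treebank convention of splitting off clitics such as n't and 'm (so that don't becomes do + n't); it covers only a subset of English clitics and does not handle typographic apostrophes (’).

```python
import re

# A few English clitics, split Penn-Treebank style (illustrative subset).
CLITIC = re.compile(r"(?i)^(\w+)(n't|'m|'re|'s|'ve|'ll|'d)$")


def split_contraction(token: str) -> list[str]:
    """Split a token into host and clitic, or return it unchanged."""
    m = CLITIC.match(token)
    return [m.group(1), m.group(2)] if m else [token]


print([part for t in ["I'm", "don't", "can't", "dogs"] for part in split_contraction(t)])
# ['I', "'m", 'do', "n't", 'ca', "n't", 'dogs']
```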

Consistent tokenization is generally related to identifying lexical entities that can be looked up in some lexical resource, and this can require very complex processing for ordinary texts. Its purpose is to simplify and standardize the data for the benefit of further processing. Since even digitized texts are very inconsistent in the way they are written, tokenization is the first or nearly the first thing done for any linguistic processing task.

WebLicht currently provides access to a number of tokenizers for different languages. As mentioned in the previous section, tokenization is often combined with sentence-splitting in a single tool.

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Tokenizer 2019 | IMS: University of Stuttgart | de fr en hu it sl | tokens, sentences | Czech, Slovenian, Hungarian, Italian, French, German, English tokenizer and sentence boundary detector |
| Tokenizer/Sentences - OpenNLP Project | SfS: Uni-Tuebingen | de en tr | tokens, sentences | Tokenizer and sentence splitter from the OpenNLP project. The 'newlineBounds' parameter treats newlines as a hard break (a sentence boundary). |
| SoMaJo Tokenizer | SfS: Uni-Tuebingen | de | tokens, sentences | SoMaJo is a state-of-the-art tokenizer and sentence splitter for German web and social media texts. More information: https://github.com/tsproisl/SoMaJo |
| ASV Segmentizer (and Tokenizer) | CLARIN-D center, Natural Language Processing Group, University of Leipzig | de | tokens, sentences | The sentence segmentizer used by the Wortschatz project for German texts (also containing a very simple tokenizer) |
| BlingFire Tokenizer | SfS: Uni-Tuebingen | pl ar de fr en hi sv et hu el ru ro be it pt bg uk ur nl cs da es fi la tr vi eu ca zh hr gl he id ga ja kk ko lv lt cu fa sa sk sl ta ug | tokens, sentences | Tokenizer and sentence splitter from Microsoft, called BlingFire, designed for fast, high-quality tokenization of natural language. For more information about its quality and efficiency, see the GitHub page: https://github.com/microsoft/BlingFire |
| Tokenizer/Sentences - Alpino | SfS: Uni-Tuebingen | nl | tokens, sentences | Tokenizer and sentence splitter from Alpino. |
| Tokenizer - OpenNLP Project | SfS: Uni-Tuebingen | de en | tokens | Tokenizer from the OpenNLP Project |
| Stanford Tokenizer | SfS: Uni-Tuebingen | en | tokens, sentences | Stanford Tokenizer is an efficient, fast, deterministic tokenizer. |
| Tokenizer and Sentence Splitter | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | tokens, sentences | Detects word and sentence boundaries in raw text using WASTE (http://www.dwds.de/waste/) |
| Tokenizer | IMS: University of Stuttgart | de fr en hu it sl | tokens, sentences | Czech, Slovenian, Hungarian, Italian, French, German, English tokenizer and sentence boundary detector |

Part-of-Speech (PoS) Taggers

Part-of-speech taggers are programs that take tokenized texts as input and associate a part-of-speech (PoS) tag with each token. Each PoS tagger uses a specific, closed set of parts-of-speech, usually called a tagset in computational linguistics, and different taggers routinely have different, sometimes radically different, part-of-speech systems.

A part-of-speech is a category that abstracts some of the properties of words or tokens. For example, in the sentence “The dog ate dinner” there are other words we can substitute for dog and still have a correct sentence, words like cat, or man. Those words have some common properties and belong to a common category of words. PoS schemes are designed to capture those kinds of similarities. Words with the same PoS are in some sense similar in their use, meaning, or function.

Parts-of-speech have been independently invented at least three times in the distant past. The 2nd century BCE Greek grammar text The Art of Grammar outlined a system of eight PoS categories that became very influential in European languages: nouns, verbs, participles, articles, pronouns, prepositions, adverbs, and conjunctions, with proper nouns as a subcategory of nouns. Most PoS systems in use today have been influenced by that scheme.

PoS tagsets also differ in the level of detail they provide. A modern corpus tagset, like the CLAWS tagset used for the British National Corpus, can go far beyond the classical eight parts-of-speech and make dozens of fine distinctions. CLAWS version 7 has 22 different parts-of-speech for common nouns alone (http://www.natcorp.ox.ac.uk/docs/c7spec.html). Complex tagsets are usually organized hierarchically, to reflect commonalities between different classes of words.

Examples of widely used tagsets include STTS (Stuttgart-Tübingen Tagset for German), and the Penn Treebank Tagset (for English). Most PoS tagsets were devised for specific corpora, and are often inspired by older corpora and PoS schemes.

There is also the Universal Dependencies (UD) tagset, part of the Universal Dependencies annotation scheme, which was conceived to be applicable across all human languages: https://universaldependencies.org/
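Converting between tagsets is common when tools are combined. This sketch maps a few STTS tags to UD's universal part-of-speech tags (UPOS); the correspondences follow common practice but are an illustrative subset, not a complete or authoritative conversion table. Unmapped tags fall back to X, UD's tag for “other”.

```python
# Partial STTS -> UPOS mapping (illustrative subset, not a full conversion).
STTS_TO_UPOS = {
    "NN": "NOUN", "NE": "PROPN",
    "ADJA": "ADJ", "ADJD": "ADJ",
    "ART": "DET",
    "VVFIN": "VERB", "VVINF": "VERB", "VVPP": "VERB",
    "VAFIN": "AUX",
    "APPR": "ADP",
    "KON": "CCONJ", "KOUS": "SCONJ",
    "PPER": "PRON",
    "ADV": "ADV",
    "CARD": "NUM",
    "$.": "PUNCT", "$,": "PUNCT", "$(": "PUNCT",
}


def to_upos(stts_tags: list[str]) -> list[str]:
    """Map STTS tags to UPOS; unmapped tags become 'X'."""
    return [STTS_TO_UPOS.get(tag, "X") for tag in stts_tags]


print(to_upos(["ART", "NN", "VVFIN", "ADJD", "$."]))
# ['DET', 'NOUN', 'VERB', 'ADJ', 'PUNCT']
```

The conversion loses information: STTS distinguishes attributive (ADJA) from predicative or adverbial (ADJD) adjectives, while UPOS collapses both to ADJ, so the mapping cannot be reversed without further context.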

PoS taggers are usually machine learning applications that have been trained with data from a particular corpus that has had PoS tags added by human annotators. PoS taggers are often flexible enough to be retrained for new languages. Given a large manually tagged corpus in a particular language, taggers can be retrained to produce similar output from tokenized data.
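The simplest form of learning from a manually tagged corpus is to record, for each word, the tag it received most often. This baseline ignores context entirely, unlike the HMM and neural taggers listed in this section, and is shown only to illustrate training on annotated data. The toy training data (Penn Treebank tags) and the placeholder tag UNK for unseen words are assumptions.

```python
from collections import Counter, defaultdict


def train_baseline(tagged_sentences: list[list[tuple[str, str]]]) -> dict[str, str]:
    """Map each word to the tag it received most often in the training data."""
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word][tag] += 1
    return {word: c.most_common(1)[0][0] for word, c in counts.items()}


def tag_tokens(tokens: list[str], model: dict[str, str], unknown: str = "UNK") -> list[tuple[str, str]]:
    """Tag each token with its most frequent training tag, or `unknown`."""
    return [(token, model.get(token, unknown)) for token in tokens]


# Toy manually tagged corpus, invented for illustration.
training = [
    [("the", "DT"), ("dog", "NN"), ("barks", "VBZ")],
    [("the", "DT"), ("barks", "NNS"), ("fell", "VBD")],
    [("he", "PRP"), ("barks", "VBZ")],
]
model = train_baseline(training)
print(tag_tokens(["the", "dog", "barks", "loudly"], model))
# [('the', 'DT'), ('dog', 'NN'), ('barks', 'VBZ'), ('loudly', 'UNK')]
```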

PoS taggers almost always expect tokenized texts as input, and the tokens in those texts must match the ones the PoS tagger was trained to recognize. It is therefore important to make sure that the tokenizer used to preprocess texts matches the one used to create the tagger's training data.

One of the more important factors to consider in evaluating a PoS tagger is its handling of out-of-vocabulary words. A significant number of tokens in any large text will not be recognized by the tagger, no matter how large its dictionary is or how much training data was used. PoS taggers may simply output a special “unknown” tag, or may guess the right PoS from the remaining context. For some languages, especially those with complex systems of prefixes and suffixes, PoS taggers may use morphological analysis to try to find the right tag.
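One common way to guess tags for unknown words is to look at their endings, since suffixes often signal the part of speech. This sketch, loosely in the spirit of the suffix analysis used by TnT-style taggers, records which tags occur with each word ending of up to three letters in training data and uses the longest ending that was seen. The training words, the suffix length and the fallback tag NN are assumptions.

```python
from collections import Counter, defaultdict


def train_suffix_guesser(tagged_words: list[tuple[str, str]], max_suffix: int = 3):
    """Count tags for word endings of length 1..max_suffix."""
    table = defaultdict(Counter)
    for word, tag in tagged_words:
        for n in range(1, min(max_suffix, len(word)) + 1):
            table[word[-n:].lower()][tag] += 1
    return table


def guess_tag(word: str, table, max_suffix: int = 3, default: str = "NN") -> str:
    """Use the most frequent tag of the longest known ending, else `default`."""
    for n in range(min(max_suffix, len(word)), 0, -1):
        counts = table.get(word[-n:].lower())
        if counts:
            return counts.most_common(1)[0][0]
    return default


table = train_suffix_guesser([
    ("walked", "VBD"), ("jumped", "VBD"), ("quickly", "RB"),
    ("slowly", "RB"), ("happiness", "NN"), ("kindness", "NN"),
])
print(guess_tag("blorped", table), guess_tag("glimly", table), guess_tag("sadness", table))
# VBD RB NN
```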

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Part-of-Speech Tagger | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | lemmas, postags | http://zwei.dwds.de/d/suche#stts |
| POS Tagger - OpenNLP Project | SfS: Uni-Tuebingen | de en it | postags | POS Tagger from the OpenNLP Project. The model for Italian is trained on a relatively small training corpus (MIDT) and should therefore be considered experimental. |
| Charniak Parser +POS | SfS: Uni-Tuebingen | en | postags, parsing | BLLIP Parser is a statistical natural language parser including a generative constituent parser (first stage) and a discriminative maximum entropy reranker (second stage). This service comes with the default model provided by the BLLIP parser. |
| SyntaxDot-Dutch | SfS: Uni-Tuebingen | nl | lemmas, postags, morphology, depparsing | This service is a neural syntax annotator for Dutch based on deep transformer networks. It produces part-of-speech tag, lemma, morphology and dependency annotations. For more information, see the GitHub page: https://github.com/tensordot/syntaxdot |
| CAB historical text analysis | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | lemmas, postags, orthography | Orthographic normalization, PoS tagging, and lemmatization for historical German using http://www.deutschestextarchiv.de/public/cab/ (tagset: http://zwei.dwds.de/d/suche#stts) |
| Berkeley Parser - Berkeley NLP +POS | SfS: Uni-Tuebingen | de en | postags, parsing | Constituent Parser from the Berkeley NLP Project |
| SyntaxDot-German | SfS: Uni-Tuebingen | de | lemmas, postags, morphology, depparsing, namedentities, topologicalfields | This service is a neural syntax annotator for German based on deep transformer networks. It produces part-of-speech tag, lemma, morphology, named entity, topological field and dependency annotations. For more information, see the GitHub page: https://github.com/tensordot/syntaxdot |
| TreeTagger | IMS: University of Stuttgart | de fr en it | lemmas, postags | Italian, English, French, German part-of-speech tagger and lemmatiser |
| TreeTagger 2013 | IMS: University of Stuttgart | de fr en it | lemmas, postags | Italian, English, French, German part-of-speech tagger and lemmatiser |
| Sticker part-of-speech tagger UD | SfS: Uni-Tuebingen | de nl | postags | This service uses the neural network based part-of-speech tagger Sticker. It tags German and Dutch text and outputs universal part-of-speech tags. It is very fast and produces high-quality annotations. For more information, see the GitHub page: https://github.com/stickeritis/sticker |
| Jitar POS Tagger | SfS: Uni-Tuebingen | de en | postags | Jitar is a Hidden Markov Model part-of-speech tagger developed by Daniël de Kok that implements the architecture of TnT as an open source package. |
| Sticker STTS-part-of-speech tagger | SfS: Uni-Tuebingen | de | postags | This service uses the neural network based part-of-speech tagger Sticker. It tags German text with STTS part-of-speech tags. It is very fast and produces high-quality annotations. For more information, see the GitHub page: https://github.com/stickeritis/sticker |
| SMOR lemmatizer | IMS: University of Stuttgart | de | lemmas, postags | Program producing possible STTS tags and lemmas for a given list of words |
| Stuttgart Dependency Parser | IMS: University of Stuttgart | de | lemmas, postags, depparsing | |
| Constituent Parser - OpenNLP Project | SfS: Uni-Tuebingen | en | postags, parsing | Constituent Parser from the OpenNLP Project |
| RFTagger | IMS: University of Stuttgart | de | postags | German part-of-speech tagger using a fine-grained POS tagset |
| Stanford Parser | SfS: Uni-Tuebingen | en | depparsing, postags, parsing | This Stanford Parser service annotates text with both constituency and dependency parses. |
| Stanford Part-of-Speech tagger | SfS: Uni-Tuebingen | en | postags | |
| Stanford Phrase Structure Parser | SfS: Uni-Tuebingen | de en | postags, parsing | |
| Stanford Dependency Parser | SfS: Uni-Tuebingen | en | depparsing, postags | |

Morphological analyzers and lemmatizers

Introduction

Morphology is the study of how words and phrases change in form depending on their meaning, function and context. Morphological tools sometimes overlap in their functions with PoS taggers and tokenizers.

Because linguists do not always agree on what is and is not a word, different linguists may disagree on what phenomena are part of morphology, and which ones are part of syntax, phonetics, or other parts of linguistics. Generally, morphological phenomena are considered either inflectional or derivational depending on their role in a language.

Inflectional morphology

Inflectional morphology is the way words are required to change by the rules of a language depending on their syntactic role or meaning. In most European languages, many words have to change form depending on the way they are used.

How verbs change in form is traditionally called conjugation. In English, present tense verbs have to be conjugated depending on their subjects. So we say “I play”, “you play”, “we play” and “they play” but “he plays” and “she plays”. This is called agreement, and we say that in English, present tense verbs must agree with their subjects, because the properties of the subject of the verb determine the form the verb takes. In the past tense, however, English verbs take a special form to indicate that they refer to the past, and do not have to agree. We say “I played” and “he played”.
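The agreement rule for regular English verbs is simple enough to write down directly. This sketch forms the third person singular present from a base verb using common spelling rules, with a small, hypothetical exception table; “to be”, which has several present tense forms (am, are, is), is deliberately left out.

```python
import re

# Hypothetical exception table; "be" is omitted because it has several
# present-tense forms (am, are, is).
IRREGULAR_3SG = {"have": "has", "do": "does", "go": "goes"}


def third_singular(verb: str) -> str:
    """Third person singular present form of an English base verb."""
    if verb in IRREGULAR_3SG:
        return IRREGULAR_3SG[verb]
    if re.search(r"(s|x|z|ch|sh)$", verb):
        return verb + "es"          # watch -> watches
    if re.search(r"[^aeiou]y$", verb):
        return verb[:-1] + "ies"    # carry -> carries
    return verb + "s"               # play -> plays


def agree(verb: str, person: int, plural: bool) -> str:
    """Present-tense form agreeing with a subject of the given person and number."""
    return third_singular(verb) if person == 3 and not plural else verb


print(agree("play", 1, False), agree("play", 3, False), agree("play", 3, True))
# play plays play
```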

Languages can have very complex schemes that determine the forms of verbs, reflecting very fine distinctions of meaning and requiring agreement with many different features of their subjects, objects, modifiers or any other part of the context in which they appear. These distinctions are sometimes expressed by using additional words (usually called auxiliaries or sometimes helper words), and sometimes by prefixes, suffixes, or other changes to words. Many languages also combine schemes to produce potentially unlimited variations in the forms of verbs.

Nouns in English change form to reflect their number: “one dog” but “several dogs”. A few words in English also change form based on the gender of the people they refer to, like actor/actress, but these are rare in English. In most European languages, all nouns have an inherent grammatical gender and nouns referring to people or animals must almost always change form to reflect their gender.

In many languages, nouns also undergo much more complex changes to reflect their grammatical function. This is traditionally called declension. In German, for example, in “Das Auto des Lehrers ist grün.” (“The teacher's car is green.”), the word “Lehrer”, meaning teacher, is changed to “Lehrers” because it is being used to say whose car is meant.

Agreement is often present between nouns and words that are connected to them grammatically. In German, nouns undergo few changes in form when declined, but articles and adjectives used with them do. Articles and adjectives in languages with grammatical gender categories usually must also change form to reflect the gender of the nouns they refer to. Pronouns, in most European languages, also must agree with the linguistic properties of the things they refer to as well as being declined or transformed by their context in other ways.

The comparative and superlative forms of adjectives (for example, safe, safer, safest) are also usually treated as inflectional morphology.

Some languages have very complex inflectional morphologies. Among European languages, Finnish and Hungarian are known to be particularly complex, with many forms for each verb and noun and complex rules of agreement. Others are very simple - English nouns only vary between singular and plural, and even pronouns and irregular verbs like “to be” and “to have” usually have no more than a handful of specific forms. Some languages - mostly not spoken in Europe - are not thought of as having any inflectional morphological variation at all.

Just because a word has been inflected does not mean it is a different word. In English, “dog” and “dogs” are not different words just because of the -s added to indicate the plural. Behind each surface form there is a “true” word, independent of its morphology. This underlying abstraction is called the lemma, a term borrowed from Greek, where it originally meant something taken or assumed, such as the premise of an argument. The lemma is sometimes also called the canonical form of a word, and usually corresponds to the spelling you would find as a dictionary headword.

Inflectional morphology in European languages most often means changing the endings of words, either by adding to them or by modifying them in some way. But inflectional morphology can involve changing any part of a word. In English, some verbs are inflected by changing one or more of their vowels, like break, broke, and broken. In German, many common verbs (the so-called strong verbs) change the vowel in the middle of the word when they are inflected, as in singen, sang, gesungen. In Arabic and Hebrew, many nouns, verbs and other words are inflected by changing the vowels in the middle of the word. In other languages, like the Bantu languages of Africa, words are inflected by adding prefixes and changing the beginnings of words. Still other languages indicate inflection by inserting whole syllables in the middle of words, repeating parts of words, or almost any other imaginable variation. Many languages use more than one way of doing inflection.

Derivational morphology

Derivational morphology is the process of making a word from another word, usually by changing its form in a way that also changes its meaning or grammatical function. This can mean adding prefixes or suffixes to words, like the way English constructs the noun happiness and the adverb unhappily from the adjective happy. These kinds of processes are often used to change the part-of-speech or syntactic function of a word: making a verb out of a noun, an adverb out of an adjective, and so on. But sometimes they are used only to change the meaning of a word, like the prefix un- in both English and German, which negates or inverts the meaning of a word in some way but does not change its grammatical properties.

As with inflectional morphology, languages may use almost any kind of variation to derive new words from old ones.

One common process found in many different languages is to make compound words. German famously creates very long words this way, and English has many compound constructs that are sometimes written as one word, or with a hyphen, or as separate words that people understand to have a single common meaning. The opposite process - splitting a single word into multiple parts - also exists in some languages. Phrasal verbs in English and separable verbs in German are two examples, and a morphological analyzer may have to identify those constructions and handle them appropriately.
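Compound splitting is often done by recursively matching known words against the beginning of a compound. The following Python sketch assumes a tiny, hypothetical lexicon and treats only the German linking -s (as in Arbeitszimmer) as a linking element; real compound splitters use large lexicons, more linking elements and frequency information to choose between competing splits. It does not handle separable verbs.

```python
# Hypothetical mini-lexicon of German stems (lowercased); a real splitter
# would use a large dictionary and frequency data.
LEXICON = {"haus", "tür", "arbeit", "zimmer", "schlüssel"}

# Linking elements tried between parts: none, or the German linking -s.
LINKERS = ("", "s")


def split_compound(word: str, min_len: int = 3) -> list[str]:
    """Split `word` into known parts (plus linking elements), or return [] if impossible."""
    w = word.lower()
    if w in LEXICON:
        return [w]
    # Try every split point that leaves at least `min_len` characters on each side.
    for i in range(min_len, len(w) - min_len + 1):
        first, rest = w[:i], w[i:]
        if first not in LEXICON:
            continue
        for linker in LINKERS:
            if rest.startswith(linker):
                # Recursively split whatever follows the linking element.
                tail = split_compound(rest[len(linker):], min_len)
                if tail:
                    return [first] + ([linker] if linker else []) + tail
    return []
```

With this lexicon, `split_compound("Haustürschlüssel")` returns `["haus", "tür", "schlüssel"]` and `split_compound("Arbeitszimmer")` returns `["arbeit", "s", "zimmer"]`; any word containing an unknown part yields an empty list.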

Stemmers

One of the oldest and simplest tools for computational morphological analysis is the stemmer. Since most inflectional morphology in European languages is indicated by suffixes, a stemmer is a program that recognizes common inflectional endings of words and cuts them off. Stemmers were originally developed to improve information retrieval and are usually very simple programs that use a catalog of regular expressions to simplify the word forms found in digital texts. They are not very linguistically sophisticated: they miss many kinds of morphological variation, and the stems they produce are often not real words. Whenever possible, more sophisticated tools are preferred.

However, stemmers are very easy to make for new languages and are often used when better tools are unavailable. The Porter Stemmer [Porter1980] is the classic, easy-to-implement stemming algorithm for English.
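The following Python sketch illustrates the catalog-of-rules idea described above. The rule list is a small, hypothetical example chosen for illustration; it is not the Porter algorithm, and a usable stemmer would need many more rules and conditions.

```python
import re

# A tiny, hypothetical catalog of suffix rules, tried in order; the first
# rule whose pattern matches is applied and the rest are skipped.
# This is NOT the Porter algorithm, only an illustration of the idea.
SUFFIX_RULES = [
    (re.compile(r"^(\w{2,})sses$"), r"\1ss"),  # classes -> class
    (re.compile(r"^(\w{2,})ies$"), r"\1i"),    # ponies  -> poni
    (re.compile(r"^(\w{2,}[^s])s$"), r"\1"),   # dogs    -> dog (glass stays)
    (re.compile(r"^(\w{3,})ing$"), r"\1"),     # playing -> play
    (re.compile(r"^(\w{3,})ed$"), r"\1"),      # played  -> play
]


def stem(word: str) -> str:
    """Strip the first matching suffix from the lowercased word."""
    w = word.lower()
    for pattern, replacement in SUFFIX_RULES:
        stemmed, count = pattern.subn(replacement, w)
        if count:
            return stemmed
    return w
```

The sketch reduces running to runn and leaves the irregular form broke untouched, which are exactly the kinds of errors described above. For real work, an established implementation such as NLTK's `PorterStemmer` is preferable.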

Lemmatizers

Lemmatizers are programs that take tokenized texts as input and return the lemma of each token. They usually use a combination of rules for decomposing words and a dictionary. Some lemmatizers also return information about the surface form of the word, usually just enough to reconstruct the surface form, but not as much as a full morphological analysis.
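A lemmatizer combining a dictionary with decomposition rules might look roughly like the Python sketch below. The exception list, the suffix rules and the lexicon are all hypothetical and far too small for real use; the point is that irregular forms are looked up directly, and rule output is only accepted when the dictionary confirms it.

```python
# Hypothetical exception dictionary for irregular forms.
IRREGULAR = {"is": "be", "was": "be", "were": "be", "broke": "break",
             "broken": "break", "mice": "mouse"}

# Hypothetical decomposition rules: (suffix to remove, string to add back).
RULES = [("ies", "y"), ("ing", ""), ("ed", ""), ("s", "")]

# Hypothetical dictionary of known lemmas, used to validate rule output.
LEXICON = {"the", "dog", "play", "pony", "walk", "break", "be", "mouse"}


def lemmatize(token: str) -> str:
    """Return the lemma of `token`, or the lowercased token if it is unknown."""
    w = token.lower()
    if w in IRREGULAR:            # irregular forms are looked up directly
        return IRREGULAR[w]
    if w in LEXICON:              # the token is already a lemma
        return w
    for suffix, replacement in RULES:
        if w.endswith(suffix):
            candidate = w[: -len(suffix)] + replacement
            if candidate in LEXICON:  # accept only dictionary-confirmed lemmas
                return candidate
    return w
```

For example, `[lemmatize(t) for t in ["The", "dogs", "were", "playing"]]` gives `["the", "dog", "be", "play"]`.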

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| MorphAdorner Lemmatizer | SfS: Uni-Tuebingen | en | lemmas | A lemmatizer for English from MorphAdorner |
| Part-of-Speech Tagger | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | lemmas, postags | http://zwei.dwds.de/d/suche#stts |
| SepVerb Lemmatizer (using dependencies) | SfS: Uni-Tuebingen | de | lemmas | SepVerb Lemmatizer for German annotates tokens with lemmas. Separable verb lemmas are identified and merged into one lemma. The lemmatizer uses MATE to produce lemmas, then uses parsing and part-of-speech annotations from the input to correct lemmas and identify separable verbs. |
| SyntaxDot-Dutch | SfS: Uni-Tuebingen | nl | lemmas, postags, morphology, depparsing | This service is a neural syntax annotator for Dutch based on deep transformer networks. It produces part-of-speech tag, lemma, morphology and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| CAB historical text analysis | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | lemmas, postags, orthography | Orthographic normalization, PoS-tagging, and lemmatization for historical German using http://www.deutschestextarchiv.de/public/cab/ (tagset: http://zwei.dwds.de/d/suche#stts) |
| SyntaxDot-German | SfS: Uni-Tuebingen | de | lemmas, postags, morphology, depparsing, namedentities, topologicalfields | This service is a neural syntax annotator for German based on deep transformer networks. It produces part-of-speech tag, lemma, morphology, named entities, topological fields and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| TreeTagger | IMS: University of Stuttgart | de fr en it | lemmas, postags | Italian, English, French and German part-of-speech tagger and lemmatizer |
| SepVerb Lemmatizer | SfS: Uni-Tuebingen | de | lemmas | SepVerb Lemmatizer for German annotates tokens with lemmas. Separable verb lemmas are identified and merged into one lemma. The lemmatizer uses MATE to produce lemmas, then uses parsing and part-of-speech annotations from the input to correct lemmas and identify separable verbs. |
| TreeTagger 2013 | IMS: University of Stuttgart | de fr en it | lemmas, postags | Italian, English, French and German part-of-speech tagger and lemmatizer |
| SMOR lemmatizer | IMS: University of Stuttgart | de | lemmas, postags | Program producing possible STTS tags and lemmas for a given list of words |
| Stuttgart Dependency Parser | IMS: University of Stuttgart | de | lemmas, postags, depparsing | |

Morphological analyzers

Full morphological analyzers take tokenized texts as input and return complete information about the inflectional categories each token belongs to, as well as their lemma. They are often combined with PoS taggers and sometimes with syntactic parsers, because a full analysis of the morphological category of a word usually touches on syntax and almost always involves categorizing the word by its part-of-speech.

Some analyzers may also provide information about derivational morphology and break up compounds into constituent parts.

High-quality morphological analyzers almost always use large databases of words and rules of composition and decomposition. Many also employ machine learning techniques and have been trained from manually analyzed data. Developing comprehensive morphological analyzers is very challenging, especially if derivational phenomena are to be analyzed, and automatic tools will always make some mistakes.
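The Python sketch below shows the kind of output a full analyzer produces: a lemma, a part of speech and a set of inflectional features for every possible reading of a token. The lexicon, the suffix rules and the feature notation (loosely modeled on the Universal Dependencies feature style) are assumptions made for illustration. An ambiguous form like plays receives two analyses, one as a noun and one as a verb; choosing between them requires the part-of-speech and syntactic context mentioned above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Analysis:
    lemma: str
    pos: str
    features: tuple[str, ...]


# Hypothetical lexicon: lemma -> parts of speech it can have.
LEXICON = {
    "play": {"NOUN", "VERB"},
    "dog": {"NOUN"},
    "safe": {"ADJ"},
}

# Hypothetical inflection rules: (suffix, part of speech, features).
# The feature notation loosely follows Universal Dependencies.
INFLECTIONS = [
    ("", "NOUN", ("Number=Sing",)),
    ("s", "NOUN", ("Number=Plur",)),
    ("", "VERB", ("Tense=Pres",)),
    ("s", "VERB", ("Number=Sing", "Person=3", "Tense=Pres")),
    ("ed", "VERB", ("Tense=Past",)),
    ("", "ADJ", ("Degree=Pos",)),
    ("r", "ADJ", ("Degree=Cmp",)),   # safe -> safer
    ("st", "ADJ", ("Degree=Sup",)),  # safe -> safest
]


def analyze(token: str) -> list[Analysis]:
    """Return every analysis of `token` licensed by the lexicon and rules."""
    w = token.lower()
    results = []
    for suffix, pos, features in INFLECTIONS:
        if not w.endswith(suffix):
            continue
        stem = w[: len(w) - len(suffix)]  # safe for the empty suffix too
        if pos in LEXICON.get(stem, set()):
            results.append(Analysis(stem, pos, features))
    return results
```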

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Morphology analysis service using MorphAdorner | SfS: Uni-Tuebingen | en | morphology | |
| SyntaxDot-Dutch | SfS: Uni-Tuebingen | nl | lemmas, postags, morphology, depparsing | This service is a neural syntax annotator for Dutch based on deep transformer networks. It produces part-of-speech tag, lemma, morphology and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| SyntaxDot-German | SfS: Uni-Tuebingen | de | lemmas, postags, morphology, depparsing, namedentities, topologicalfields | This service is a neural syntax annotator for German based on deep transformer networks. It produces part-of-speech tag, lemma, morphology, named entities, topological fields and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| Morphology | IMS: University of Stuttgart | de | morphology | German Morphology |
| SMOR | IMS: University of Stuttgart | de | morphology | German Finite-State Morphology SMOR |

Syntax - Parsers and Chunkers

Introduction

Syntax is the study of the connections between the parts of sentences. It aims to account for the meaningful aspects of the ordering of words and phrases in language. The principles that determine which words and phrases are connected, how they are connected, and what effect that has on the ordering of the parts of a sentence are called a grammar.

There are many different theories of syntax and ideas about how syntax works. Individual linguists are usually attached to particular schools of thought depending on where they were educated, what languages and problems they work with, and to an important degree their personal preferences.

Theories of syntax fall into two broad categories that reflect different histories, priorities and theories about how language works: dependency grammars and constituency grammars. The divide between these two approaches dates back to their respective origins in the late 1950s, and the debate between them is still active. Both schemes represent the connections within sentences as trees or directed graphs, and both schools agree that representing those connections requires data structures richer than a simple sequence of words.

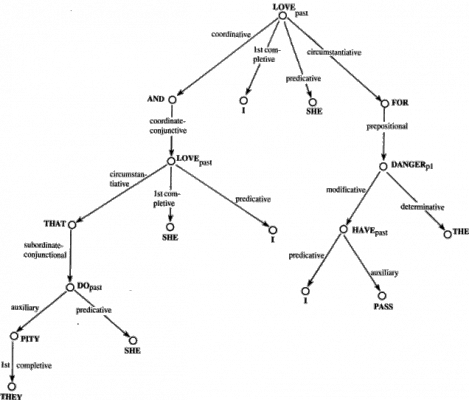

Dependency grammar

In dependency grammars, connections are usually between words or tokens, and the edges that join them have labels from a small, closed set of connection types. Figure 2 is a dependency analysis from one particular dependency grammar theory.

Dependency grammars tend to be popular among people working with languages in which word order is very flexible and words are subject to complex morphological agreement rules. Dependency grammar has a very strong tradition in Eastern Europe, but is also present among Western European and Asian linguists to varying degrees in different countries.
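One common way to store a dependency analysis, used for example in CoNLL-style treebank formats, is to record for each token the position of its head and the label of the edge joining them. The Python sketch below encodes a short English sentence this way; the labels are taken from the Universal Dependencies inventory, which is an assumption and not the theory used in Figure 2. The `is_tree` check reflects the usual requirement that the structure has a single root and no cycles.

```python
from dataclasses import dataclass


@dataclass
class Token:
    id: int       # 1-based position in the sentence
    form: str
    head: int     # id of the governing token; 0 stands for the artificial root
    deprel: str   # edge label from a small, closed inventory


# "The dog chased the cat", with Universal Dependencies-style labels (an assumption).
SENTENCE = [
    Token(1, "The", 2, "det"),
    Token(2, "dog", 3, "nsubj"),
    Token(3, "chased", 0, "root"),
    Token(4, "the", 5, "det"),
    Token(5, "cat", 3, "obj"),
]


def is_tree(tokens: list[Token]) -> bool:
    """Check for exactly one root and that every token reaches it without a cycle."""
    heads = {t.id: t.head for t in tokens}
    if sum(1 for h in heads.values() if h == 0) != 1:
        return False
    for start in heads:
        seen, node = set(), start
        while node != 0:
            if node in seen or node not in heads:
                return False
            seen.add(node)
            node = heads[node]
    return True


# Print one labeled edge per line, e.g. "nsubj(chased, dog)".
for t in SENTENCE:
    governor = "ROOT" if t.head == 0 else SENTENCE[t.head - 1].form
    print(f"{t.deprel}({governor}, {t.form})")
```

Note that the edge from "chased" to "cat" connects two words that are not adjacent, which is why a flat list of words alone cannot hold this information.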

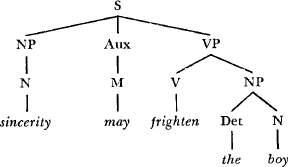

Constituency grammar

Constituency grammars view the connections between words as a hierarchical relationship between phrases. They break sentences up into parts, usually but not always continuous ones, and then break each part down into smaller parts, until they reach the level of individual tokens. The trees drawn to illustrate constituency grammars reflect this structure. Edges in constituency grammars are not usually labeled.

Constituency grammar draws heavily on the theory of formal languages in computer science, but tends to use formal grammar in conjunction with other notions to better account for phenomena in human language. As a school of thought, it is historically associated with the work of Noam Chomsky and the American linguistic tradition. It tends to be popular among linguists working on languages like English, in which morphological agreement plays a small role and word order is relatively fixed. It is very strong in English-speaking countries, but is also present in much of Western Europe and to varying degrees in other parts of the world.

Figure 3 is an example of a constituency analysis of an English sentence, from one of Noam Chomsky's books.
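A constituency tree can be represented as nested structures in which each phrase contains its parts. The Python sketch below uses nested tuples and Penn Treebank-style labels (both assumptions, not the format of any particular tool listed here) and shows two basic operations: printing the tree in the common bracketed notation, and listing the span of tokens covered by each constituent. As in the text, the edges carry no labels.

```python
# A constituency tree as nested tuples: (label, child, child, ...).
# Leaves are plain strings (the tokens); labels follow Penn Treebank
# conventions, which is an assumption made for this example.
TREE = ("S",
        ("NP", ("DT", "The"), ("NN", "dog")),
        ("VP", ("VBD", "chased"),
               ("NP", ("DT", "the"), ("NN", "cat"))))


def to_brackets(node) -> str:
    """Render a tree in bracketed notation."""
    if isinstance(node, str):
        return node
    label, *children = node
    return "(" + label + " " + " ".join(to_brackets(c) for c in children) + ")"


def constituent_spans(tree) -> list[tuple[str, int, int]]:
    """List (label, start, end) for every node; token positions, end exclusive."""
    spans = []

    def walk(node, start: int) -> int:
        if isinstance(node, str):
            return start + 1  # a token covers one position
        label, *children = node
        end = start
        for child in children:
            end = walk(child, end)
        spans.append((label, start, end))
        return end

    walk(tree, 0)
    return spans
```

Here `to_brackets(TREE)` returns `(S (NP (DT The) (NN dog)) (VP (VBD chased) (NP (DT the) (NN cat))))`, and `constituent_spans(TREE)` includes `("NP", 0, 2)` for "The dog" and `("VP", 2, 5)` for "chased the cat".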

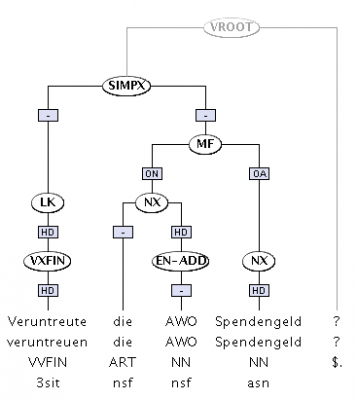

Hybrid grammar

Among computational linguists and people working in natural language processing, there is a growing tendency to use hybrid grammars that combine elements of both the constituency and dependency traditions. These grammars take advantage of a property of constituency grammars called headedness. In many constituency frameworks, each phrase identified by the grammar has a single constituent designated as its head; in the noun phrase “the old dog”, for instance, the noun “dog” is the head. A constituency analysis in which all phrases have heads and all edges have labels is broadly equivalent to a dependency analysis.

Figure 4 is a tree from the TüBa-D/Z treebank of German [Hinrichs2004]. This treebank uses a hybrid analysis of sentences, containing constituents that often have heads and edges with dependency labels. As with many hybrid analyses, not all constituents have heads and some edges have empty labels, so it is not completely compatible with a strict dependency framework. However, the added information in the edges also makes it incompatible with a strict constituency framework. This kind of syncretic approach tends to find more acceptance in corpus and computational linguistics than in theoretical linguistics.
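The rough equivalence between headed constituency trees and dependency trees can be shown with a small conversion. In the Python sketch below, every phrase records which of its children is its head (a hypothetical encoding); the lexical head of a phrase is the lexical head of its head child, and every non-head child becomes a dependent of that word. Edge labels are omitted to keep the sketch short; in a tree with labeled edges they would carry over to the dependency arcs. Phrases without heads, as found in hybrid treebanks, would need extra handling that this sketch does not attempt.

```python
# A headed constituency tree (hypothetical encoding): each phrase is
# (label, index_of_head_child, child, ...); preterminals are (tag, word).
TREE = ("S", 1,
        ("NP", 1, ("DT", "The"), ("NN", "dog")),
        ("VP", 0, ("VBD", "chased"),
                  ("NP", 1, ("DT", "the"), ("NN", "cat"))))


def to_dependencies(tree) -> list[tuple[int, str, int]]:
    """Convert a fully headed tree into (position, word, head position) triples.

    Positions are 1-based; head position 0 marks the root of the sentence.
    """
    words: list[str] = []
    head_of: dict[int, int] = {}

    def lexical_head(node) -> int:
        if isinstance(node[1], str):       # preterminal: (tag, word)
            words.append(node[1])
            return len(words)
        _label, head_index, *children = node
        heads = [lexical_head(child) for child in children]
        for i, h in enumerate(heads):
            if i != head_index:            # non-head children depend on the head
                head_of[h] = heads[head_index]
        return heads[head_index]

    root = lexical_head(tree)
    head_of[root] = 0
    return [(i, words[i - 1], head_of[i]) for i in range(1, len(words) + 1)]
```

For this tree, the result is `[(1, "The", 2), (2, "dog", 3), (3, "chased", 0), (4, "the", 5), (5, "cat", 3)]`: "chased" is the root, "dog" and "cat" depend on it, and each determiner depends on its noun.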

Parsers

Parsing is the process (either automated or manual) of performing syntactic analysis. A computer program that parses natural language is called a parser. Superficially, the process of parsing natural language resembles the operation of a parser in computer science, and computing has borrowed much of its vocabulary for parsing from linguistics. But in practice, natural language parsing is a very different undertaking.

The oldest natural language parsers were constructed using the same kinds of finite-state grammars and recognition rules as parsers for computer languages, but the diversity and complexity of human language make those kinds of parsers fragile and difficult to construct. The most accurate and robust parsers today incorporate statistical principles to some degree, and have often been trained on manually parsed texts using machine learning techniques.
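As an illustration of the grammar-based approach, the following Python sketch implements the CKY (Cocke-Kasami-Younger) chart algorithm, a classic method for recognizing sentences with a context-free grammar in Chomsky normal form. The toy grammar is hypothetical and covers only one sentence pattern; how easily it fails on anything outside that pattern illustrates why hand-written grammars are fragile. Statistical parsers often use probabilistic variants of the same chart idea.

```python
from itertools import product

# Toy grammar in Chomsky normal form (hypothetical, for illustration).
BINARY = {  # (B, C) -> set of A such that A -> B C
    ("NP", "VP"): {"S"},
    ("DT", "NN"): {"NP"},
    ("VBD", "NP"): {"VP"},
}
LEXICAL = {  # word -> set of A such that A -> word
    "the": {"DT"}, "dog": {"NN"}, "cat": {"NN"}, "chased": {"VBD"},
}


def cky_recognize(words: list[str], start: str = "S") -> bool:
    """Return True if the grammar derives the whole word sequence from `start`."""
    n = len(words)
    # table[i][j] holds the nonterminals that can span words[i:j].
    table = [[set() for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        table[i][i + 1] = set(LEXICAL.get(w.lower(), set()))
    for length in range(2, n + 1):          # fill spans from short to long
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):       # every split point of the span
                for b, c in product(table[i][k], table[k][j]):
                    table[i][j] |= BINARY.get((b, c), set())
    return start in table[0][n]
```

The recognizer accepts "The dog chased the cat" but rejects "The dog chased the mouse" simply because mouse is missing from its lexicon.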

Parsers usually require some preprocessing of the input text, although some are integrated applications that perform preprocessing internally. Generally, the input to a parser must have sentences clearly delimited, and must usually be tokenized. Many common parsers work with a PoS-tagger as a preprocessor, and the output of the PoS-tagger must use the tags expected by the parser. When parsers are trained using machine learning, the preprocessing must match the preprocessing used for the training data.
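A mismatch between a tagger's tags and a parser's expected tags is easy to detect before parsing. The Python sketch below assumes input that has already been split into sentences and tokens and then tagged; the tagset shown is a small, hypothetical subset of STTS, not the tagset of any particular parser listed below.

```python
# Hypothetical: the tags a German parser was trained with (a small STTS subset).
PARSER_TAGSET = {"ART", "ADJA", "ADJD", "NN", "NE", "VAFIN", "VVFIN", "$.", "$,"}


def incompatible_tags(tagged_sentences, tagset):
    """List (sentence index, token, tag) for every tag outside the parser's tagset.

    The input must already be split into sentences and tokens and tagged:
    a list of sentences, each a list of (token, tag) pairs.
    """
    problems = []
    for s, sentence in enumerate(tagged_sentences):
        for token, tag in sentence:
            if tag not in tagset:
                problems.append((s, token, tag))
    return problems
```

An empty result only shows that the tags belong to the expected inventory; it says nothing about whether the tokenization matches the parser's training data, which matters as well.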

The output of a parser is never simply plain text - the data must be structured in a way that encodes the connections between words that are not next to each other.

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| MaltParser | SfS: Uni-Tuebingen | de | depparsing | MaltParser is a system for data-driven dependency parsing, which can be used to induce a parsing model from treebank data and to parse new data using an induced model. MaltParser is developed by Johan Hall, Jens Nilsson and Joakim Nivre at Växjö University and Uppsala University, Sweden. |
| TurboParser | SfS: Uni-Tuebingen | en | depparsing | A multilingual dependency parser based on linear programming relaxations. |
| MaltParser | SfS: Uni-Tuebingen | it | depparsing | MaltParser is a system for data-driven dependency parsing, which can be used to induce a parsing model from treebank data and to parse new data using an induced model. MaltParser is developed by Johan Hall, Jens Nilsson and Joakim Nivre at Växjö University and Uppsala University, Sweden. The model for Italian was trained on the MIDT corpus and is *very* experimental. |
| Turkish dependency parsing (Malt) | SfS: Uni-Tuebingen | tr | depparsing | Turkish dependency parsing service using MaltParser. The service is trained on the METU-Sabancı treebank. |
| SyntaxDot-Dutch | SfS: Uni-Tuebingen | nl | lemmas, postags, morphology, depparsing | This service is a neural syntax annotator for Dutch based on deep transformer networks. It produces part-of-speech tag, lemma, morphology and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| SyntaxDot-German | SfS: Uni-Tuebingen | de | lemmas, postags, morphology, depparsing, namedentities, topologicalfields | This service is a neural syntax annotator for German based on deep transformer networks. It produces part-of-speech tag, lemma, morphology, named entities, topological fields and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| Sticker universal dependency parser | SfS: Uni-Tuebingen | de nl | depparsing | This service uses the neural network based dependency parser Sticker. It parses German and Dutch text and outputs universal dependencies. It is very fast and produces high-quality annotations. For more information, please see the corresponding GitHub page: https://github.com/stickeritis/sticker |
| SynCoP - Dependency Parser | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | depparsing | http://www.ims.uni-stuttgart.de/forschung/ressourcen/lexika/TagSets/stts-table.html |
| Stuttgart Dependency Parser | IMS: University of Stuttgart | de | lemmas, postags, depparsing | |
| Stanford Parser | SfS: Uni-Tuebingen | en | depparsing, postags, parsing | This Stanford Parser service annotates text with both constituency and dependency parses. |
| Stanford Dependency Parser | SfS: Uni-Tuebingen | en | depparsing, postags | |
| English Dependency Parser (Malt) | SfS: Uni-Tuebingen | en | depparsing | MaltParser is a system for data-driven dependency parsing, which can be used to induce a parsing model from treebank data and to parse new data using an induced model. MaltParser is developed by Johan Hall, Jens Nilsson and Joakim Nivre at Växjö University and Uppsala University, Sweden. |

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Charniak Parser (needs POS) | SfS: Uni-Tuebingen | en | parsing | BLLIP Parser is a statistical natural language parser including a generative constituent parser (first stage) and a discriminative maximum entropy reranker (second stage). This service comes with the default model provided by BLLIP Parser. |
| Charniak Parser +POS | SfS: Uni-Tuebingen | en | postags, parsing | BLLIP Parser is a statistical natural language parser including a generative constituent parser (first stage) and a discriminative maximum entropy reranker (second stage). This service comes with the default model provided by BLLIP Parser. |
| Constituent Parser | IMS: University of Stuttgart | de en | parsing | German and English constituent parser |
| Berkeley Parser - Berkeley NLP +POS | SfS: Uni-Tuebingen | de en | postags, parsing | Constituent Parser from the Berkeley NLP Project |
| Berkeley Parser - Berkeley NLP (needs POS) | SfS: Uni-Tuebingen | de en | parsing | Constituent Parser from the Berkeley NLP Project, trained on TüBa-D/Z. Part-of-speech annotations from the input are used in parsing. |
| Constituent Parser - OpenNLP Project | SfS: Uni-Tuebingen | en | postags, parsing | Constituent Parser from the OpenNLP Project |
| Stanford Parser | SfS: Uni-Tuebingen | en | depparsing, postags, parsing | This Stanford Parser service annotates text with both constituency and dependency parses. |
| Stanford Phrase Structure Parser | SfS: Uni-Tuebingen | de en | postags, parsing | |

Chunkers

High-quality parsers are complex programs that are difficult to construct and computationally expensive to run. For many languages, there are no good parsers at all.

Chunkers, also known as shallow parsers, are a more lightweight solution [Abney1991]. They provide a partial and simplified parse, often simply breaking sentences up into clauses by looking for certain kinds of words that often indicate the beginning of a phrase. They are much simpler to write and much less resource-intensive to run than full parsers, and are available for many languages.
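A typical chunking rule states that a noun phrase consists of an optional determiner, any number of adjectives and one or more nouns. The Python sketch below applies that single rule greedily to a sentence that has already been PoS-tagged with Penn Treebank tags; both the rule and the tagset are assumptions for illustration. Libraries such as NLTK provide a `RegexpParser` class for writing rules of this kind as regular expressions over tags.

```python
# A PoS-tagged sentence: (token, tag) pairs with Penn Treebank tags (an assumption).
TAGGED = [("The", "DT"), ("old", "JJ"), ("dog", "NN"), ("chased", "VBD"),
          ("a", "DT"), ("cat", "NN"), ("in", "IN"), ("the", "DT"), ("garden", "NN")]


def np_chunks(tagged):
    """Greedily find noun-phrase chunks matching the rule DT? JJ* NN+.

    Returns (start, end, text) triples; `end` is exclusive.
    """
    chunks, i, n = [], 0, len(tagged)
    while i < n:
        j = i
        if tagged[j][1] == "DT":                              # optional determiner
            j += 1
        while j < n and tagged[j][1] == "JJ":                 # any adjectives
            j += 1
        k = j
        while k < n and tagged[k][1] in ("NN", "NNS"):        # one or more nouns
            k += 1
        if k > j:  # at least one noun: accept the chunk
            chunks.append((i, k, " ".join(word for word, _ in tagged[i:k])))
            i = k
        else:      # no chunk starts here: move on
            i += 1
    return chunks
```

On the example sentence, the chunker finds "The old dog", "a cat" and "the garden" without building any structure above the level of these phrases.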

Named Entity Recognition

Named entities are a generalization of the idea of a proper noun. They refer to names of people, places, non-generic things, brand names, and sometimes to highly subject-specific terms, among many other possibilities. There is no fixed limit to what constitutes a named entity, but these kinds of highly specific usages constitute a large share of the words in texts that are not in dictionaries and not correctly recognized by linguistic tools. They can be very important for information retrieval, machine translation, topic identification and many other tasks in computational linguistics.

Named entity recognition tools can be based on rules, on statistical methods and machine learning algorithms, or on combinations of those methods. The rules they apply are sometimes very ad hoc - like looking for sequences of two or more capitalized words - and do not generally follow from any organized linguistic theory. Large databases of names of people, places and things usually also form a part of a named entity recognition tool.

Named entity recognition tools sometimes also try to classify the elements they find. Determining whether a particular phrase refers to a person, a place, a company name or other thing can be important for research or further processing.
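The Python sketch below combines the two simple techniques described above: a lookup in a (hypothetical, tiny) database of known names, which also supplies a class for each name, and the ad hoc rule that sequences of two or more capitalized words are likely names. Names found only by the rule receive the placeholder class UNKNOWN, since the rule says nothing about what kind of entity was found. The rule misses names containing lowercase words such as "von", and would wrongly include a capitalized sentence-initial word that happens to precede a name.

```python
import re

# Hypothetical database of known names and their classes.
GAZETTEER = {"Otto von Bismarck": "PER", "North Dakota": "LOC",
             "Universität Tübingen": "ORG"}

# Ad hoc rule: two or more capitalized words in a row.
CAPITALIZED_RUN = re.compile(r"\b[A-ZÄÖÜ][\w-]*(?:\s+[A-ZÄÖÜ][\w-]*)+")


def find_entities(text: str) -> list[tuple[int, int, str, str]]:
    """Return (start, end, surface string, class) for each entity found."""
    found, covered = [], set()
    # 1. Known names from the database, with their classes.
    for name, label in GAZETTEER.items():
        for m in re.finditer(re.escape(name), text):
            found.append((m.start(), m.end(), m.group(), label))
            covered.update(range(m.start(), m.end()))
    # 2. Rule-based candidates that do not overlap a known name.
    for m in CAPITALIZED_RUN.finditer(text):
        if not covered.intersection(range(m.start(), m.end())):
            found.append((m.start(), m.end(), m.group(), "UNKNOWN"))
    return sorted(found)
```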

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Person Name Recognizer | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | namedentities | Person name recognizer and annotator for historical German (grammar optimized for journals and high precision) based on weighted finite-state transducers |
| OpenNLP Named Entity Recognizer | SfS: Uni-Tuebingen | en es | namedentities | |
| Illinois Named Entity Recognizer | SfS: Uni-Tuebingen | en | namedentities | This is a state-of-the-art NER tagger that tags plain text with named entities. The newest version tags entities with the "classic" 4-label type set (people / organizations / locations / miscellaneous). |
| Sticker Named Entity Recognizer | SfS: Uni-Tuebingen | de nl | namedentities | This service is built on a neural network based sequence labeller that can label named entities for German and Dutch. It produces state-of-the-art results. For more information about the Sticker sequence labeller, see the GitHub page: https://github.com/stickeritis/sticker |
| OpenNLP Externally Trained Named Entity Recognizer | SfS: Uni-Tuebingen | de en | namedentities | OpenNLP Named Entity Recognizer that uses an external OpenNLP model to annotate named entities. |
| SyntaxDot-German | SfS: Uni-Tuebingen | de | lemmas, postags, morphology, depparsing, namedentities, topologicalfields | This service is a neural syntax annotator for German based on deep transformer networks. It produces part-of-speech tag, lemma, morphology, named entities, topological fields and dependency annotations. For more information, please see the corresponding GitHub page: https://github.com/tensordot/syntaxdot |
| German Named Entity Recognizer | SfS: Uni-Tuebingen | de | namedentities | German Named Entity Recognizer trained with a maximum entropy approach using the OpenNLP maxent library. Two models are available: one is trained on the CoNLL 2003 training set (conll), the other on release 8 of the TüBa-D/Z corpus (tuebadz). |
| German NER | IMS: University of Stuttgart | de | namedentities | Named Entity Recognition for German based on Stanford Tools |
| Stanford Named Entity Recognizer | SfS: Uni-Tuebingen | en | namedentities | Stanford NER is a Java implementation of a Named Entity Recognizer. Named Entity Recognition (NER) labels sequences of words in a text which are the names of things, such as person and company names. This service includes the models provided by Stanford: CoNLL 2003, MUC, and a model that has the intersection of CoNLL and MUC labels and the union of their data. |
| Academic Named Entity Recognizer | SfS: Uni-Tuebingen | de | namedentities | This service performs academia-related Named Entity Recognition for German. The fine-tuned BERT model tags entities in the context of academia: persons, institutions, and research areas/disciplines. For more information, see Semiautomatic Data Generation for Academic Named Entity Recognition in German Text Corpora. |

Geolocation

Geolocation is the identification of the geographical coordinates of entities. For example, named entities of the location type can be assigned a longitude and latitude, as well as other geographical information. Knowing that a named entity refers to a particular place can make it much easier for computers to disambiguate other references. A reference to Bismarck near a reference to a place in Germany likely refers to the 19th-century German politician Otto von Bismarck, but near a reference to North Dakota, it likely refers to the American city of Bismarck, the capital of North Dakota.

A geolocation tool needs a database of the names of locations with their geographical coordinates. Some databases are available as web services (for example http://www.geonames.org/), and other organizations offer this kind of data for download as a database dump.
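The Bismarck example above can be sketched as a gazetteer lookup with context-based disambiguation. In the Python sketch below, the gazetteer entries, the region codes and the rule for choosing a reading are hypothetical, and the coordinates are approximate values for illustration only; a real tool would draw on a full database such as GeoNames and use more robust disambiguation.

```python
from typing import Optional

# Hypothetical gazetteer of unambiguous place names; coordinates are approximate.
GAZETTEER = {
    "Berlin": {"region": "DE", "coords": (52.52, 13.40)},
    "North Dakota": {"region": "US", "coords": (47.5, -100.5)},
}

# Hypothetical candidate readings for an ambiguous name.
AMBIGUOUS = {
    "Bismarck": [
        {"type": "PER", "region": "DE", "coords": None},              # Otto von Bismarck
        {"type": "LOC", "region": "US", "coords": (46.81, -100.78)},  # Bismarck, North Dakota
    ],
}


def resolve(name: str, context_places: list[str]) -> Optional[dict]:
    """Pick the reading of `name` whose region matches the places mentioned nearby."""
    regions = {GAZETTEER[p]["region"] for p in context_places if p in GAZETTEER}
    matches = [r for r in AMBIGUOUS.get(name, []) if r["region"] in regions]
    # Only commit to an answer when exactly one reading fits the context.
    return matches[0] if len(matches) == 1 else None
```

With this data, `resolve("Bismarck", ["Berlin"])` selects the person reading, `resolve("Bismarck", ["North Dakota"])` selects the city with its coordinates, and without any context the function leaves the question open.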

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| Geolocation | SfS: Uni-Tuebingen | de en es | geo | Assigns geographical coordinates to named entities |

Tools for querying corpora

A variety of services provide specialized query languages for searching specific linguistic corpora. The query results are annotated in the returned data, which can then be further analyzed with other tools.
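Many of these query languages describe a match as a sequence of constraints on the annotation layers of consecutive tokens; in CQP, for instance, `[pos="ADJA"] [pos="NN"]` finds an attributive adjective followed by a noun. The Python sketch below imitates that idea on a tiny, hypothetical annotated corpus with STTS tags, requiring each regular expression to match the whole attribute value. It is not an implementation of CQP or of any service listed below.

```python
import re

# A tiny, hypothetical annotated corpus: one attribute dictionary per token.
CORPUS = [
    {"word": "Das", "lemma": "der", "pos": "ART"},
    {"word": "alte", "lemma": "alt", "pos": "ADJA"},
    {"word": "Haus", "lemma": "Haus", "pos": "NN"},
    {"word": "ist", "lemma": "sein", "pos": "VAFIN"},
    {"word": "grün", "lemma": "grün", "pos": "ADJD"},
    {"word": ".", "lemma": ".", "pos": "$."},
    {"word": "Ein", "lemma": "ein", "pos": "ART"},
    {"word": "neues", "lemma": "neu", "pos": "ADJA"},
    {"word": "Auto", "lemma": "Auto", "pos": "NN"},
]


def query(corpus, pattern):
    """Find token sequences matching `pattern`, a list of {attribute: regex} constraints.

    Each regex must match the whole attribute value.
    Returns (start position, matched words) pairs.
    """
    hits = []
    for start in range(len(corpus) - len(pattern) + 1):
        window = corpus[start:start + len(pattern)]
        if all(
            re.fullmatch(regex, token.get(attr, "")) is not None
            for token, constraints in zip(window, pattern)
            for attr, regex in constraints.items()
        ):
            hits.append((start, " ".join(t["word"] for t in window)))
    return hits
```

For example, `query(CORPUS, [{"pos": "ADJA"}, {"pos": "NN"}])` returns `[(1, "alte Haus"), (7, "neues Auto")]`.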

| Name | Creator | Language | Annotations | Description |
|---|---|---|---|---|
| dlexDB Lemmas | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | entries | Filter query against dlexDB Lemmas |
| Dingler-Corpus Query | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | tokens, lemmas, sentences, postags, matches, orthography | http://zwei.dwds.de/d/suche#stts |
| Cosmas2 Goethe corpus query | IDS: Institut für Deutsche Sprache | de | text, tokens, sentences, matches | |
| C4-Corpus Query | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | tokens, lemmas, sentences, postags, matches | http://zwei.dwds.de/d/suche#stts |
| Federated Content Search (FCS) | Leibniz-Institut für Deutsche Sprache (IDS) Mannheim | | text, tokens, sentences, matches | Search on corpus data available in CLARIN centers providing FCS endpoints. |
| DTA-Corpus Query | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | tokens, lemmas, sentences, postags, matches, orthography | http://zwei.dwds.de/d/suche#stts |
| CQP Query | SfS: Uni-Tuebingen | de | text, tokens, lemmas, sentences, postags, matches | Query a corpus using CQP |
| dlexDB Types | BBAW: Berlin-Brandenburg Academy of Sciences and Humanities | de | entries | Filter query against dlexDB Types |