Using TCFXB 0.3 Tutorial

How to use the TCF0.3 - Java objects binding library to consume and produce TCF0.3 documents

Introduction

Producing linguistic annotations in TCF from scratch

Consuming and producing linguistic annotations from/into TCF

Introduction

To participate in WebLicht's tool chaining, services must use mutually compatible input and output formats. WebLicht services use an XML-based exchange format called TCF as this interoperable, machine-readable format.

A developer of a service that takes TCF input and outputs TCF has two options:

- The first option is to parse the TCF document, extract the necessary annotations, process them to build a new annotation layer, and add the new annotations to the document according to the TCF specifications. For creating and parsing TCF, one can use a DOM parser or a StAX parser. A StAX parser is preferable in most cases because a DOM parser holds the entire document in memory, while with a StAX parser the developer decides which pieces of information are saved and how they are saved, which gives fine control over parsing efficiency and memory use.

- The second option is to use the TCF binding library, which we offer to developers of WebLicht services. It shields the developer from the particular format of TCF and its parsing. The library binds linguistic data, represented by XML elements and attributes in TCF, to linguistic data represented by Java objects accessed from a TextCorpusData object. The library interface allows easy access to the linguistic annotations in TCF and easy addition of new linguistic annotations. Thus, the developer can extract linguistic annotations from, and create them in, a TextCorpusData Java object directly, without dealing with XML, since the TCF document is read and written automatically.
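For comparison, the first option can be sketched with the StAX API that ships with the JDK (javax.xml.stream). This is only a minimal illustration, not part of the tutorial samples: the class name StaxTokenReader and the inline XML string are assumptions made for the example. It keeps only the token strings and skips every other layer, which is the kind of selective reading a StAX parser allows.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

// Hypothetical helper for illustration; not a class of the binding library.
class StaxTokenReader {

    /** Collects the text of every <token> element and ignores all other elements. */
    static List<String> readTokens(String tcfXml) {
        List<String> tokens = new ArrayList<>();
        try {
            XMLStreamReader reader = XMLInputFactory.newInstance()
                    .createXMLStreamReader(new StringReader(tcfXml));
            while (reader.hasNext()) {
                int event = reader.next();
                if (event == XMLStreamConstants.START_ELEMENT
                        && "token".equals(reader.getLocalName())) {
                    // getElementText() reads up to the matching end tag.
                    tokens.add(reader.getElementText());
                }
            }
            reader.close();
        } catch (XMLStreamException e) {
            throw new IllegalArgumentException("Input is not well-formed XML", e);
        }
        return tokens;
    }

    public static void main(String[] args) {
        String xml = "<TextCorpus xmlns=\"http://www.dspin.de/data/textcorpus\" lang=\"de\">"
                + "<text>Karin fliegt.</text>"
                + "<tokens><token ID=\"t_0\">Karin</token>"
                + "<token ID=\"t_1\">fliegt</token>"
                + "<token ID=\"t_2\">.</token></tokens></TextCorpus>";
        System.out.println(readTokens(xml));
    }
}
```

Even this small reader has to know element names and handle XML errors itself; the binding library takes over that work.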

This tutorial is devoted to the second option and explains how to use the TCF binding library. To follow the tutorial you need to download the TCFXB0.3 tutorial samples. After unzipping the tutorial, you will find the following folders:

- /src (source code)

- /data (sample TCF documents)

- /lib (the binding library)

- /doc (binding library API docs)

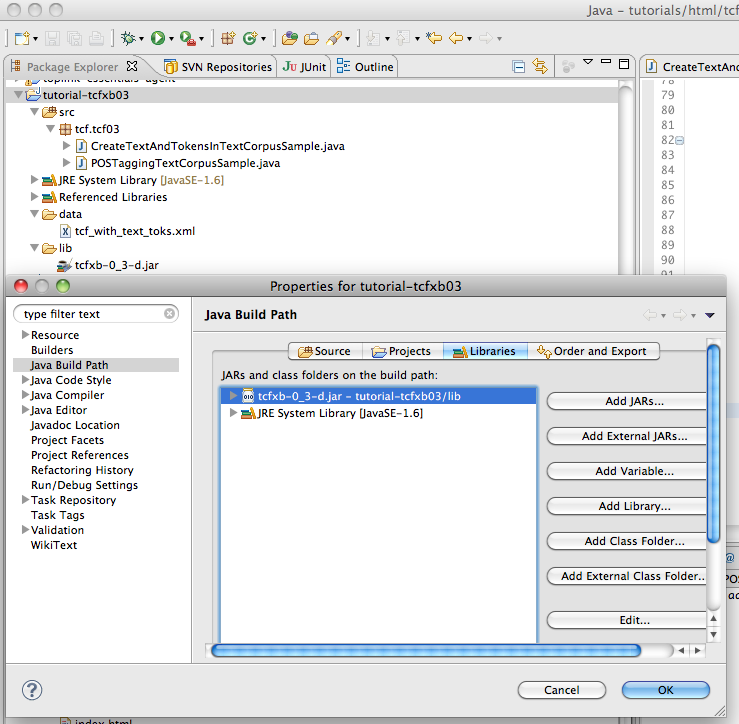

Create a Java project with these data and set your project classpath to point to the jars in the /lib folder. For example in Eclipse, the sample project and its Java Build path should look like this:

Two sample classes provided in the tutorial correspond to two use cases:

- You have plain-text input and want to produce linguistic annotations in TCF

- You have TCF input with linguistic annotations, want to process it and add new linguistic annotations into the TCF document

Producing linguistic annotations in TCF from scratch

CreateTextAndTokensInTextCorpusSample.java (in the src/ folder) shows how to create a new TCF document when you have no TCF input to process. For simplicity in this example, we will hard-code the linguistic annotations in Java and create a new TCF document with these linguistic annotations.

The linguistic annotations we are going to add into the TCF are text and tokens annotations for the German language ("de"):

String myText = "Karin fliegt nach New York. Sie will dort Urlaub machen.";

String[] myTokens = new String[]{"Karin", "fliegt", "nach", "New", "York",
    ".", "Sie", "will", "dort", "Urlaub", "machen", "."};

We will save the resulting TCF document in:

String fileNameOut = "data/OUT-tcf_with_text_toks.xml";

Use a CreateTextAndTokensInTextCorpusSample object to create a TCF document:

CreateTextAndTokensInTextCorpusSample sample = new CreateTextAndTokensInTextCorpusSample();

sample.process("de", myText, myTokens, fileNameOut);

Compile and run the CreateTextAndTokensInTextCorpusSample class.

It produces valid TCF output that you can inspect in data/OUT-tcf_with_text_toks.xml:

<?xml version="1.0" encoding="UTF-8"?>
<D-Spin xmlns="http://www.dspin.de/data" version="0.3">
  <MetaData xmlns="http://www.dspin.de/data/metadata">
    <source></source>
  </MetaData>
  <TextCorpus xmlns="http://www.dspin.de/data/textcorpus" lang="de">
    <text>Karin fliegt nach New York. Sie will dort Urlaub machen.</text>
    <tokens>
      <token ID="t_0">Karin</token>
      <token ID="t_1">fliegt</token>
      <token ID="t_2">nach</token>
      <token ID="t_3">New</token>
      <token ID="t_4">York</token>
      <token ID="t_5">.</token>
      <token ID="t_6">Sie</token>
      <token ID="t_7">will</token>
      <token ID="t_8">dort</token>
      <token ID="t_9">Urlaub</token>
      <token ID="t_10">machen</token>
      <token ID="t_11">.</token>
    </tokens>
  </TextCorpus>
</D-Spin>

Now let's see how the document is produced. First, we need to create a TextCorpusData object that will automatically handle TCF creation. To create a TextCorpusData object that doesn't consume and only produces TCF you need to specify:

- OutputStream (or OutputStreamWriter) into which TCF is going to be written;

- annotation layers you want to write into TCF, and the language of the TCF document:

FileOutputStream fos = new FileOutputStream(fileNameOut);

LayerTag[] layersToWrite = new LayerTag[]{LayerTag.TEXT, LayerTag.TOKENS};

TextCorpusData tc = new TextCorpusData(fos, layersToWrite, lang);

Now you can add annotation layers (that you have specified) into the document. This is how to add a text layer into TCF:

tc.writeTextLayer(text);

For more complex annotation layers, you first need to create objects that correspond to the sub-elements of the annotation layer. For example, the tokens layer consists of token elements, so you need to create a list of tokens before you can write a tokens layer into TCF. To create objects that correspond to such elements, use a TextCorpusFactory object obtained from the corresponding TextCorpusData object.

TextCorpusFactory tcf = tc.getFactory();

List<Token> tokens = new ArrayList<Token>();

for (String tokenString : tokenizedText) {

Token token = tcf.createToken(tokenString);

tokens.add(token);

}

Now we can write the tokens layer:

tc.writeTokensLayer(tokens);

After the TextCorpusData object has received all the layers that were specified to be written, it closes the OutputStream and the TCF document is ready.

In the same way you can add any other annotation layers into the document. The LayerTag enumeration represents all the linguistic layers available in TCF0.3. If you want to develop a service that produces a layer other than the ones listed, please contact us with the suggestion.

It is important that you add exactly the layers that you have specified when creating the TextCorpusData object. If you try to add more than you have specified, an exception will be thrown. If you fail to add one or more layers, the OutputStream will never close, as it will be waiting for more layers to be written.
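The contract described above can be modelled with a small toy class. This is not the binding library's code: DemoLayer and DeclaredLayersWriter are hypothetical names invented for this sketch, and the real TextCorpusData may behave differently in detail. The sketch only shows why an undeclared layer is rejected and why the stream stays open until every declared layer has arrived.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.EnumSet;
import java.util.Set;

// Hypothetical stand-in for layer names; not the library's LayerTag.
enum DemoLayer { TEXT, TOKENS, POSTAGS }

// Toy model of the "write exactly the declared layers" contract.
class DeclaredLayersWriter {
    private final OutputStream out;
    private final Set<DemoLayer> pending = EnumSet.noneOf(DemoLayer.class);
    private boolean closed = false;

    DeclaredLayersWriter(OutputStream out, Set<DemoLayer> layersToWrite) {
        this.out = out;
        this.pending.addAll(layersToWrite);
    }

    /** Writes one declared layer; closes the stream after the last one. */
    void write(DemoLayer layer, String content) {
        if (!pending.remove(layer)) {
            throw new IllegalStateException("Layer not declared or already written: " + layer);
        }
        try {
            out.write(content.getBytes(StandardCharsets.UTF_8));
            if (pending.isEmpty()) {
                out.close();
                closed = true;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** False while at least one declared layer is still missing. */
    boolean isClosed() {
        return closed;
    }

    public static void main(String[] args) {
        DeclaredLayersWriter w = new DeclaredLayersWriter(
                new ByteArrayOutputStream(), EnumSet.of(DemoLayer.TEXT, DemoLayer.TOKENS));
        w.write(DemoLayer.TEXT, "<text/>");
        System.out.println("closed after one layer: " + w.isClosed());
        w.write(DemoLayer.TOKENS, "<tokens/>");
        System.out.println("closed after all layers: " + w.isClosed());
    }
}
```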

Consuming and producing linguistic annotations from/into TCF

POSTaggingTextCorpusSample.java (in the src/ folder) shows how to create a TCF document when you have TCF input with linguistic annotations that you process to build new linguistic annotations.

The sample consumes the TCF document tcf_with_text_toks.xml (in the data/ folder). The document contains text, token, lemma, and sentence annotations. The linguistic annotations we are going to add to the TCF are part-of-speech annotations. The part-of-speech tagger used in the sample needs only the token strings from the input document in order to assign part-of-speech tags to the tokens, so it is interested only in the tokens layer and can ignore all other layers in the input document.

The input file is:

String fileNameIn = "data/tcf_with_text_toks.xml";

The sample produces TCF that we will save in:

String fileNameOut = "data/OUT-tcf_with_text_toks_pos.xml";

Use a POSTaggingTextCorpusSample object to process a TCF document:

POSTaggingTextCorpusSample sample = new POSTaggingTextCorpusSample();

sample.process(fileNameIn, fileNameOut);

Compile and run the POSTaggingTextCorpusSample class.

It produces valid TCF output that you can inspect in data/OUT-tcf_with_text_toks_pos.xml. The TCF is the same as in data/tcf_with_text_toks.xml, with the POStags layer added:

<?xml version="1.0" encoding="UTF-8"?>
<D-Spin xmlns="http://www.dspin.de/data" version="0.3">
  <MetaData xmlns="http://www.dspin.de/data/metadata">
    <source></source>
  </MetaData>
  <TextCorpus xmlns="http://www.dspin.de/data/textcorpus" lang="de">
    ...
    <tns:POStags tagset="STTS">
      <tns:tag tokID="t1" ID="pt_0">NN</tns:tag>
      <tns:tag tokID="t2" ID="pt_1">VVFIN</tns:tag>
      <tns:tag tokID="t3" ID="pt_2">APPR</tns:tag>
      <tns:tag tokID="t4" ID="pt_3">NE</tns:tag>
      <tns:tag tokID="t5" ID="pt_4">NE</tns:tag>
      <tns:tag tokID="t6" ID="pt_5">$.</tns:tag>
      <tns:tag tokID="t7" ID="pt_6">PPER</tns:tag>
      <tns:tag tokID="t8" ID="pt_7">VVFIN</tns:tag>
      <tns:tag tokID="t9" ID="pt_8">ADV</tns:tag>
      <tns:tag tokID="t10" ID="pt_9">NN</tns:tag>
      <tns:tag tokID="t11" ID="pt_10">VVINF</tns:tag>
      <tns:tag tokID="t12" ID="pt_11">$.</tns:tag>
    </tns:POStags>
    ...
  </TextCorpus>
</D-Spin>
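In this output each tag is connected to its token only through the tokID attribute. A consumer that wants token/tag pairs has to resolve those references. The following is a minimal sketch using plain maps; StandoffJoin is a hypothetical helper for illustration, not a library class, and the pairing of t1 and t2 with "Karin" and "fliegt" is an assumption, since the tokens layer of the input file is not shown here.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not a class of the binding library.
class StandoffJoin {

    /** Pairs each token string with the tag whose tokID points at it. */
    static List<String> join(Map<String, String> tokenById,
                             Map<String, String> tagByTokenId) {
        List<String> pairs = new ArrayList<>();
        for (Map.Entry<String, String> entry : tagByTokenId.entrySet()) {
            String token = tokenById.get(entry.getKey());
            if (token == null) {
                // A tag that references an unknown token ID is an error.
                throw new IllegalArgumentException("Dangling tokID: " + entry.getKey());
            }
            pairs.add(token + "/" + entry.getValue());
        }
        return pairs;
    }

    public static void main(String[] args) {
        // Assumed token IDs and strings for the first two tokens.
        Map<String, String> tokens = new LinkedHashMap<>();
        tokens.put("t1", "Karin");
        tokens.put("t2", "fliegt");

        // Tags keyed by the token ID they refer to.
        Map<String, String> tags = new LinkedHashMap<>();
        tags.put("t1", "NN");
        tags.put("t2", "VVFIN");

        System.out.println(join(tokens, tags));
    }
}
```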

Now let's see how the input document is processed and the output document is produced. First, we need to create a TextCorpusData object that will automatically handle TCF reading and writing. To create a TextCorpusData object that consumes input TCF and produces output TCF, you need to specify:

- InputStream (or InputStreamReader) from which TCF is going to be read;

- OutputStream (or OutputStreamWriter) into which TCF is going to be written;

- annotation layers you want to read from TCF input;

- annotation layers you want to add into TCF output:

FileInputStream fis = new FileInputStream(fileNameIn);

FileOutputStream fos = new FileOutputStream(fileNameOut);

LayerTag[] layersToRead = new LayerTag[] {LayerTag.TOKENS};

LayerTag[] layersToWrite = new LayerTag[] {LayerTag.POSTAGS};

TextCorpusData tc = new TextCorpusData(fis, layersToRead, fos, layersToWrite);

Now you can get the data from the layers that you have specified for reading. In our case it is only the tokens layer:

List<Token> tokens = tc.getTokensLayer().getTokens();

Now you can process the tokens, create the annotation layers that you have specified, and add them to the document. In our case we add only one layer, the part-of-speech tags layer. The part-of-speech layer consists of a list of part-of-speech tags. Each part-of-speech tag is created using a TextCorpusFactory object obtained from the corresponding TextCorpusData object. Each tag must also reference its token by specifying the ID of the token it refers to:

List<Tag> posTags = new ArrayList<Tag>();

TextCorpusFactory tcf = tc.getFactory();

for (Token token : tokens) {

String tokenString = token.getTokenString();

String tag = tagger.tag(tokenString);

Tag posTag = tcf.createTag(tag, token.getID());

posTags.add(posTag);

}
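The tagger object used in the loop is not defined in the snippet. For experimenting, a stand-in with the same tag(String) call shape could look like the sketch below. LookupTagger is a hypothetical class written for this illustration, not the tagger shipped with the sample; it simply looks tokens up in a small lexicon and falls back to a default tag.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for the sample's tagger; for illustration only.
class LookupTagger {
    private final Map<String, String> lexicon = new HashMap<>();
    private final String fallbackTag;

    LookupTagger(String fallbackTag) {
        this.fallbackTag = fallbackTag;
    }

    /** Registers a tag for a token string. */
    void learn(String tokenString, String tag) {
        lexicon.put(tokenString, tag);
    }

    /** Returns the registered tag, or the fallback tag for unknown tokens. */
    String tag(String tokenString) {
        return lexicon.getOrDefault(tokenString, fallbackTag);
    }

    public static void main(String[] args) {
        LookupTagger tagger = new LookupTagger("NN");
        tagger.learn("fliegt", "VVFIN");
        tagger.learn("nach", "APPR");
        tagger.learn(".", "$.");
        for (String token : new String[]{"Karin", "fliegt", "nach", "."}) {
            System.out.println(token + "\t" + tagger.tag(token));
        }
    }
}
```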

Now we can write the part-of-speech layer. It is necessary to specify the part-of-speech tagset of the tagging:

String tagsetUsed = "STTS";

tc.writePOSTagsLayer(posTags, tagsetUsed);

After the TextCorpusData object has received all the layers that were specified to be written, it closes the OutputStream and the TCF document is ready.

In the same way you can add any other annotation layers into the document. The LayerTag enumeration represents all the linguistic layers available in TCF0.3. If you want to develop a service that produces a layer other than one of these, please contact us with the suggestion.

It is important that you add exactly the layers that you have specified when creating the TextCorpusData object. If you try to add more than you have specified, an exception will be thrown. If you fail to add one or more layers, the OutputStream will never close, as it will be waiting for more layers to be written.