Tokenizer and Sentence Boundary Detector Service

From WebLichtWiki


Introduction

This tutorial presents a workflow for creating a web service for TCF processing. It shows a basic tokenizer and sentence boundary detector service. The service processes POST requests containing TCF data with a text layer and uses the text from that layer to produce token and sentence annotations.

This web service models the case in which the processing tool object is cheap to create and does not consume much memory. In that case the best approach is to create a new tool object for each client POST request, as the service implementation does.
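The per-request pattern can be sketched in plain Java roughly as follows. `SketchTool`, `SketchResource`, and `PerRequestDemo` are hypothetical stand-ins, not the archetype's classes, and the token counting is only a toy workload. The point is that one shared resource object creates a fresh, cheap tool inside each call, so no tool state is shared between requests.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a processing tool that is cheap to create.
class SketchTool {
    // Counts how many tool objects were created (for illustration only).
    static final AtomicInteger CREATED = new AtomicInteger();

    SketchTool() {
        CREATED.incrementAndGet();
    }

    // Toy "processing": counts whitespace-separated tokens.
    int countTokens(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }
}

// Hypothetical stand-in for the single shared resource object.
class SketchResource {
    // Called once per client request; the tool lives only for this call.
    int handleRequest(String text) {
        SketchTool tool = new SketchTool();
        return tool.countTokens(text);
    }
}

class PerRequestDemo {
    public static void main(String[] args) {
        SketchResource resource = new SketchResource(); // one shared instance
        System.out.println(resource.handleRequest("Karin fliegt nach New York."));
        System.out.println(resource.handleRequest("Sie will dort Urlaub machen."));
        System.out.println("tools created: " + SketchTool.CREATED.get());
    }
}
```

Because the tool is created inside the method, concurrent requests never see each other's tool instance. The cost is one object construction per request, which is only acceptable when construction is cheap.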

This web service also demonstrates two ways of returning the TCF output in the HTTP response: as a streaming output and as a byte array. Each has advantages and disadvantages. Returning a byte array is simpler to implement, but the whole TCF output is held in memory at once, so the server might run out of memory if the output is large. Returning a streaming output is slightly more complicated to implement, but the TCF output is streamed and only part of it is held in memory at a time. This makes it possible to handle larger TCF output.
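The difference between the two response styles can be illustrated with a small, framework-free sketch. It is not the archetype's code: `StreamingBody` is a hypothetical interface that only mirrors the shape of the JAX-RS `StreamingOutput` callback, and `ResponseStyles` with its methods is invented for illustration. Both variants call the same `process` step; they differ only in where the bytes end up.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in mirroring the shape of a streaming callback:
// the caller supplies the response stream and we write into it.
interface StreamingBody {
    void write(OutputStream out) throws IOException;
}

class ResponseStyles {
    // Shared processing step: writes the result chunk by chunk to any stream.
    static void process(String[] chunks, OutputStream out) throws IOException {
        for (String chunk : chunks) {
            out.write(chunk.getBytes(StandardCharsets.UTF_8));
        }
        out.flush();
    }

    // Variant 1: buffer the complete output in memory, then return it.
    static byte[] asBytes(String[] chunks) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        process(chunks, buffer);
        return buffer.toByteArray();
    }

    // Variant 2: return a callback; nothing is written until the
    // caller hands over the stream, and no full copy is kept here.
    static StreamingBody asStream(String[] chunks) {
        return out -> process(chunks, out);
    }

    public static void main(String[] args) throws IOException {
        String[] chunks = {"<tokens>", "<token>Hello</token>", "</tokens>"};
        System.out.println(asBytes(chunks).length + " bytes buffered");
        asStream(chunks).write(System.out);
        System.out.println();
    }
}
```

In the buffered variant the memory cost grows with the size of the output, while in the callback variant it is bounded by the size of one chunk plus whatever the underlying stream buffers.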

Prerequisites

The tutorial assumes you have the following software installed:

- NetBeans IDE 7.2.1

- wget or curl command line tool (optional)

Adding Clarin Repository

The example WebLicht service is provided as a Maven archetype stored in the Clarin Repository. You therefore need to add the Clarin Repository to your list of Maven repositories. Skip this step if it is already in that list.

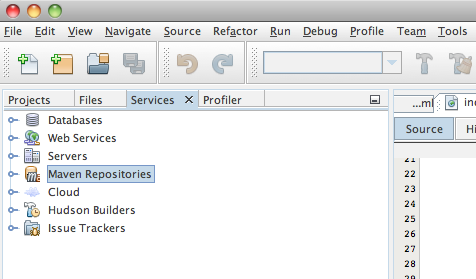

In NetBeans IDE, go to the list of Maven Repositories under the Services tab:

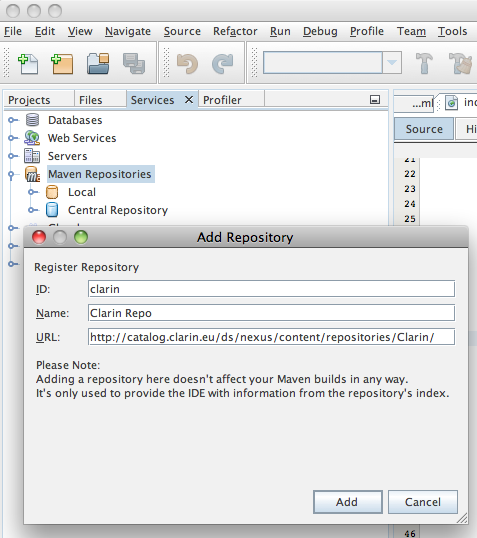

Right-click on "Maven Repositories" and select the "Add Repository" option. Fill in the following information in the "Add Repository" window:

- Repository ID: clarin

- Repository Name: Clarin Repo

- Repository URL: http://catalog.clarin.eu/ds/nexus/content/repositories/Clarin/

Finish by pressing "Add".

Creating a Project from an Archetype

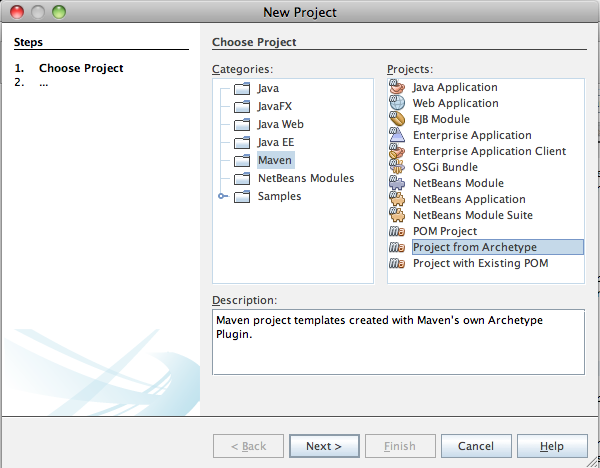

Once the Clarin Repository is accessible, the archetype can be used right away. Press the "New Project" button in the menu bar and select Maven -> Project From Archetype.

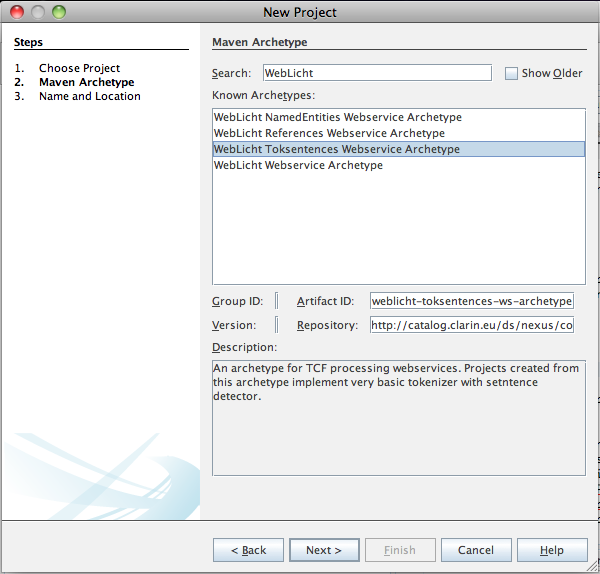

In the next screen, find and select "WebLicht Toksentences Webservice Archetype".

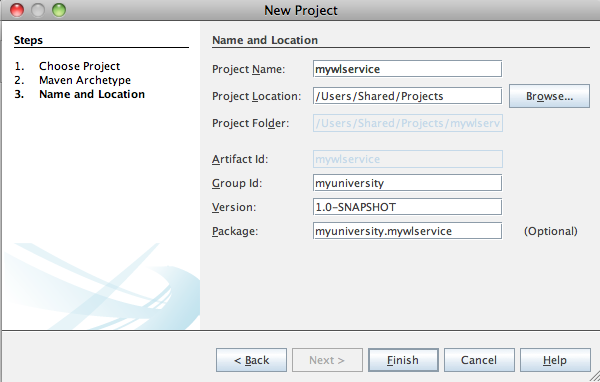

Provide a name for your project and a directory to store it in, as you would for any NetBeans project. You can also provide a group ID for your Maven artifact and the package name you would like to use.

That's it! You have just created a WebLicht web service.

Testing Webservices

To test the service, run it on your local server. Right-click on the project and select the "Run" option. In the next screen, select the Tomcat server and click the "OK" button.

The most straightforward way to test a web service is to use the wget or curl command line tool. For example, to POST the TCF data from "input.xml" to the service and display the service output in the terminal window, run curl:

curl -H 'content-type: text/tcf+xml' -d @input.xml -X POST http://localhost:8080/mywlproject/annotate/bytes

curl -H 'content-type: text/tcf+xml' -d @input.xml -X POST http://localhost:8080/mywlproject/annotate/stream

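Or wget (by default it saves the response to a file named after the last URL path segment, here "bytes" or "stream", instead of printing it; add the option -O - to print it to the terminal):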

wget --post-file=input.xml --header='Content-Type: text/tcf+xml' http://localhost:8080/mywlproject/annotate/bytes

wget --post-file=input.xml --header='Content-Type: text/tcf+xml' http://localhost:8080/mywlproject/annotate/stream

Make sure the current directory actually contains a file named "input.xml" in TCF 0.4 format with a text layer. Such a file is provided for testing under "Web Pages" in your project; copy it to your current directory.
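If you prefer to test from Java rather than from the command line, the same POST request can be sketched with the JDK's own HTTP client. This is only an illustration under a few assumptions: it requires Java 11 or later for `java.net.http`, and the class name `TcfPostSketch` and its method `buildRequest` are hypothetical. The URL and content type are the ones used in the curl and wget calls above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Paths;

class TcfPostSketch {
    // Builds (but does not send) a POST request carrying the TCF bytes.
    static HttpRequest buildRequest(String serviceUrl, byte[] tcfBytes) {
        return HttpRequest.newBuilder(URI.create(serviceUrl))
                .header("Content-Type", "text/tcf+xml")
                .POST(HttpRequest.BodyPublishers.ofByteArray(tcfBytes))
                .build();
    }

    // Usage: TcfPostSketch <service-url> <tcf-file>
    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length != 2) {
            System.err.println("usage: TcfPostSketch <service-url> <tcf-file>");
            return;
        }
        byte[] tcf = Files.readAllBytes(Paths.get(args[1]));
        HttpRequest request = buildRequest(args[0], tcf);
        // Sends the request and reads the whole response body as a string.
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

With the service running locally, it could be invoked with http://localhost:8080/mywlproject/annotate/bytes and input.xml as the two arguments.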

What's next?

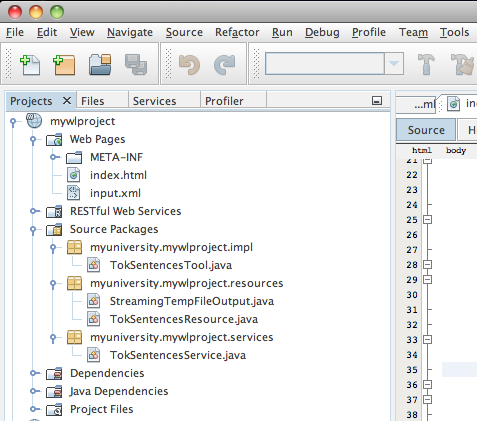

You will probably want to customize the provided code. Let's take a look at the files in the project:

- TokSentencesService.java

  - The application definition resides here. Use it to define the path to your application and/or to add more resources. In this example, the resource TokSentencesResource is added as a singleton resource, which means that only one instance of the resource is created for the application.

- TokSentencesResource.java

  - This is the definition of a resource. If more resources are required, you can use it as a template for them. (Don't forget to add them to TokSentencesService.java.)

  - In this example the resource has subresource methods that correspond to the request paths "annotate/stream" and "annotate/bytes" of the request URL. The methods implement the same processing (both call the same process() method); the only difference between them is their return type. Refer to the Response type section to decide which of these methods to use in your resource implementation.

  - The process() method instantiates a TokSentencesTool object, so a new instance of the tool object is created for each client request. Do this only when the tool object is cheap to create and does not consume much memory. For other cases, please refer to the Instantiation of resources section.

- TokSentencesTool.java

  - The actual implementation of the tool resides here. This template provides a simple tokenizer and sentence boundary detector implementation. If you are writing a service wrapper for an existing tool, this is where you would call your tool, translating the input and output data from and into TCF format. The wlfxb library can help with that, as it does in this implementation.
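To give an idea of what a simple tokenizer and sentence boundary detector can look like, here is a minimal sketch based on the JDK's `java.text.BreakIterator`. It is not the archetype's TokSentencesTool code: the class `TokSentencesSketch` and its methods are hypothetical, and the sketch works on plain strings only, leaving out the reading and writing of TCF layers that the real tool does through wlfxb.

```java
import java.text.BreakIterator;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

class TokSentencesSketch {

    // Cuts the text at every boundary the iterator reports and keeps
    // the non-blank segments, trimmed of surrounding whitespace.
    private static List<String> segments(BreakIterator it, String text) {
        List<String> result = new ArrayList<>();
        it.setText(text);
        int start = it.first();
        for (int end = it.next(); end != BreakIterator.DONE; start = end, end = it.next()) {
            String segment = text.substring(start, end).trim();
            if (!segment.isEmpty()) {
                result.add(segment);
            }
        }
        return result;
    }

    // Sentence boundary detection with the JDK's locale-aware rules.
    static List<String> sentences(String text, Locale locale) {
        return segments(BreakIterator.getSentenceInstance(locale), text);
    }

    // Tokenization: words and punctuation marks become separate tokens.
    static List<String> tokens(String sentence, Locale locale) {
        return segments(BreakIterator.getWordInstance(locale), sentence);
    }

    public static void main(String[] args) {
        String text = "Hello world. How are you?";
        for (String sentence : sentences(text, Locale.ENGLISH)) {
            System.out.println(sentence + " -> " + tokens(sentence, Locale.ENGLISH));
        }
    }
}
```

A wrapper for an existing tool would replace the two BreakIterator calls with calls to that tool and keep the surrounding structure: read the text, compute tokens and sentences, write them back as annotations.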